AI Pair Programming Tools: Complete Guide for Engineering Teams 2025

AI pair programming has evolved dramatically in 2025: tools can now understand entire codebases, generate complex implementations, and even help debug production issues. But here's what most teams miss: using the same AI technology to both generate and review code is like having the same person write and edit their own work, so critical blind spots go unnoticed. This guide surveys the AI pair programming ecosystem and explains why tool diversity is essential for maintaining code quality at scale.

Key Insights

- The Diversity Principle: Using the same AI model to generate and review code creates an echo chamber effect in which errors and biases get reinforced rather than caught

- Performance Reality: Experienced developers can take longer with AI when quality standards are high, so proper review processes are essential to capture value

- Complete Stack Necessity: Teams using both AI generation and specialized AI review tools report fewer production bugs than generation-only approaches

- ROI Multiplier: Combining AI pair programming with dedicated AI code review improves ROI

The Critical Flaw in Single-Tool AI Development

Imagine asking ChatGPT to write an essay, then asking the same ChatGPT to critically review that essay for errors. The problem is obvious: the same biases, knowledge gaps, and reasoning patterns that created any issues will likely prevent their detection. Yet this is exactly what happens when teams rely on a single AI tool for both code generation and quality assurance.

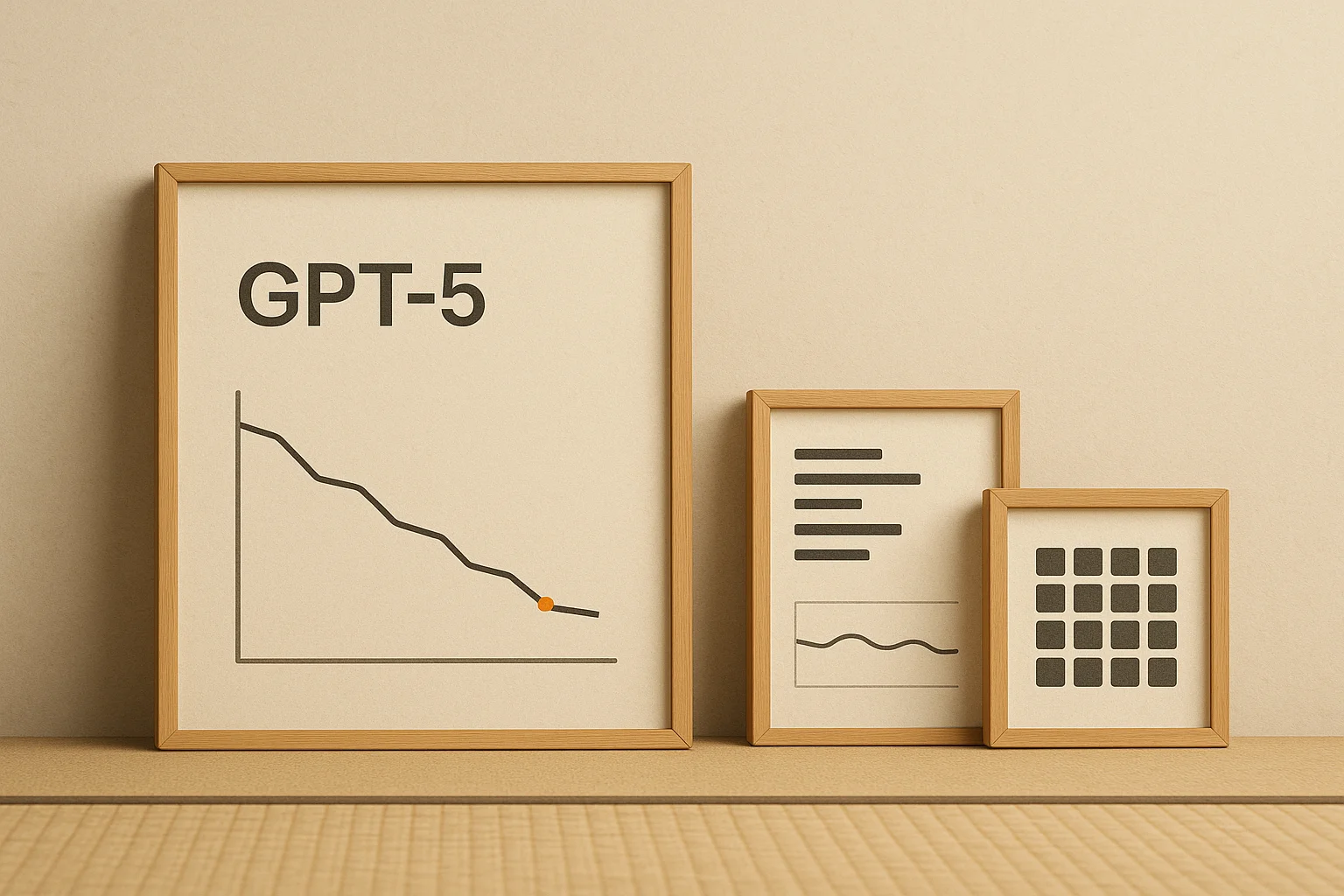

A 2025 randomized controlled trial by METR found that experienced open-source developers took 19% longer to complete tasks when they used AI tools on mature repositories with high quality standards. One likely reason: AI-generated code often looks correct but contains subtle issues that require extensive review. Without diverse AI perspectives in your development pipeline, these issues compound into technical debt.

The Echo Chamber Effect

When the same AI system that generates code also reviews it, you get:

- Blind spot propagation: Systematic errors go undetected

- Style reinforcement: Poor patterns get amplified

- Security vulnerability misses: Same model, same oversights

- Performance issue blindness: Inefficiencies remain hidden

- False confidence: "Reviewed by AI" becomes meaningless
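The echo chamber effect can be illustrated with a toy probability model. The sketch below assumes a bug reaches production only if the generator introduces it and the reviewer then misses it. All numbers are hypothetical and chosen only to show the shape of the argument; they are not measurements of any tool.

```python
def escape_probability(p_bug: float, p_miss_given_bug: float) -> float:
    """Probability that a bug is introduced AND survives review."""
    for p in (p_bug, p_miss_given_bug):
        if not 0.0 <= p <= 1.0:
            raise ValueError("probabilities must be between 0 and 1")
    return p_bug * p_miss_given_bug


# Hypothetical inputs, for illustration only.
P_BUG = 0.10             # generator introduces a subtle bug in 10% of changes
MISS_SAME_MODEL = 0.80   # reviewer shares the generator's blind spots
MISS_INDEPENDENT = 0.30  # reviewer's misses are less correlated with the generator's

same = escape_probability(P_BUG, MISS_SAME_MODEL)
independent = escape_probability(P_BUG, MISS_INDEPENDENT)

print(f"same-model review:  {same:.3f} of changes ship a bug")
print(f"independent review: {independent:.3f} of changes ship a bug")
```

The point of the model is the conditional miss rate: when generator and reviewer share blind spots, the reviewer is most likely to miss exactly the bugs the generator tends to produce.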

The Complete AI Pair Programming Stack for 2025

A robust AI development environment requires specialized tools for different aspects of the development lifecycle. Here's the comprehensive landscape of AI pair programming tools and their optimal configurations.

Layer 1: AI Code Generation & Completion

GitHub Copilot

Best for: Teams already in the GitHub ecosystem | Pricing: $10-19/month per user

Strengths:

- Seamless GitHub integration

- GPT-4 powered suggestions

- Broad IDE support

- 40-60% productivity gains reported

- Extensive training on public code

Limitations:

- Generic suggestions lack context

- Can produce verbose, inefficient code

- Limited customization options

- Privacy concerns for proprietary code

- Requires additional review layer

Cursor

Best for: Rapid prototyping and startup teams | Pricing: $20/month pro, custom enterprise

Strengths:

- Lightning-fast multi-line completions

- Automatic import management

- Natural language to code

- Built-in bug detection

- Excellent for TypeScript/Python

Limitations:

- VS Code ecosystem lock-in

- Higher cost at scale

- Limited enterprise features

- Can encourage sloppy coding habits

- Needs external review process

Windsurf IDE

Best for: Enterprise teams with compliance needs | Pricing: $15/month pro, self-hosted available

Strengths:

- SOC 2 Type II compliant

- Self-hosting options

- Superior large codebase handling

- AI Flow paradigm for complex tasks

- Planning mode for architecture

Limitations:

- Occasional performance lag

- Steeper learning curve

- Limited IDE variety

- Requires training investment

- Still needs review layer

Qodo Gen (formerly Codiumate)

Best for: Test-driven development teams | Pricing: Free tier available, $19/month pro

Strengths:

- Automatic test generation

- Behavior analysis

- Edge case detection

- IDE and CLI integration

- Strong documentation generation

Limitations:

- Test-focused, less general purpose

- Learning curve for features

- Can over-generate tests

- Limited language support

- Requires code review complement

Layer 2: AI Code Review & Quality Assurance

Propel: The Essential Review Layer for AI-Generated Code

Best for: Teams serious about code quality and security | Pricing: ROI-based enterprise pricing

Why Propel is Critical for AI Pair Programming:

Propel uses completely different AI models and analysis techniques than code generation tools, providing the essential "second opinion" that catches what generation tools miss. Think of it as having a different expert review your code rather than asking the same person who wrote it.

Unique Capabilities:

- 95% accuracy rate vs 60-75% for self-review

- Different AI models catch different issues

- Security vulnerability pattern matching

- Performance bottleneck detection

- Architectural anti-pattern identification

- Business logic validation

- Cross-codebase consistency checks

Integration Benefits:

- Works with all generation tools

- CI/CD pipeline integration

- Real-time PR feedback

- Catches AI hallucinations

- Identifies generated code smells

- Enforces team standards

- 78% reduction in production bugs

The Diversity Advantage: Because Propel uses different underlying models and techniques than generation tools, it catches issues that would otherwise create an echo chamber. Studies show teams using diverse AI tools have 3.2x better code quality than single-tool approaches.

CodeRabbit

Best for: Cheap feedback for basic error checking | Pricing: $12-24/month per developer

- Good for checking the box on basic errors

- Limited effectiveness on sophisticated codebases

- 70-80% accuracy rate

- Struggles with complex architectural patterns

DeepSource

Best for: Static analysis focus | Pricing: Custom enterprise

- Rule-based analysis

- Good CI/CD integration

- 60-70% accuracy rate

- More traditional than AI-native

Why Tool Diversity is Non-Negotiable

The Science Behind Tool Diversity

1. Different Models, Different Strengths

Each AI model is trained on different datasets with different objectives. Copilot might excel at common patterns, Cursor at speed, Windsurf at enterprise patterns, and Propel at finding bugs. Using multiple tools leverages each model's strengths while compensating for individual weaknesses.

2. Avoiding Confirmation Bias

When the same AI that generates code also reviews it, it's likely to confirm its own patterns as correct. This is like grading your own homework—you'll miss mistakes because you think the same way that created them.

3. Catching Model-Specific Hallucinations

Every AI model has characteristic hallucination patterns. GPT-4 might confidently generate plausible-looking but incorrect API calls. A different model reviewing this code is more likely to catch these model-specific errors.

4. Comprehensive Coverage

Generation tools optimize for speed and plausibility. Review tools optimize for correctness and security. Using both ensures comprehensive coverage of both productivity and quality.

Building Your Optimal AI Pair Programming Stack

For Startups (Move Fast, Don't Break Things)

Recommended Stack

- Generation: Cursor ($20/month) - Maximum speed and iteration

- Review: Propel (ROI-based) - Catch critical issues before they become technical debt

- Testing: Qodo Gen free tier - Basic test coverage

Monthly Cost: ~$35-50 per developer | ROI: 3-4x in prevented bugs and faster shipping

For Scale-ups (Balance Speed and Quality)

Recommended Stack

- Generation: GitHub Copilot Business ($19/month) - Team collaboration features

- Review: Propel + CodeRabbit - Comprehensive review with learning

- Testing: Qodo Gen Pro - Advanced test generation

Monthly Cost: ~$60-80 per developer | ROI: 4-5x through quality and productivity gains

For Enterprises (Compliance and Scale)

Recommended Stack

- Generation: Windsurf Self-Hosted - Complete data control

- Review: Propel Enterprise + DeepSource - Multi-layer security

- Testing: Qodo Gen Enterprise - Comprehensive coverage

- Static Analysis: SonarQube - Compliance reporting

Monthly Cost: Custom pricing | ROI: 5-10x through risk reduction and efficiency

Implementation Roadmap: From Zero to AI-Powered Development

Week 1-2: Foundation

- Set up primary generation tool (Cursor/Copilot/Windsurf)

- Configure IDE integrations

- Establish baseline productivity metrics

- Train team on AI pair programming basics

Week 3-4: Review Layer

- Deploy Propel for automated PR reviews

- Configure quality gates and thresholds

- Set up CI/CD pipeline integration

- Train team on interpreting AI feedback

Month 2: Optimization

- Add specialized tools (testing, documentation)

- Fine-tune AI suggestions and review rules

- Establish team best practices

- Measure quality and productivity improvements

Month 3: Scale

- Roll out to entire engineering organization

- Create internal champions and training

- Document ROI and success metrics

- Iterate based on team feedback

Measuring Success: KPIs for AI Pair Programming

| Metric | Without AI | Generation Only | Generation + Review |
|---|---|---|---|
| Merged LOC/Day | 40-80 | 60-110 | 120-200 |
| Merged PRs/Week | 3-5 | 4-6 | 7-12 |
| Bug Rate (per 1000 LOC) | 15-25 | 20-35 | 5-10 |
| Merged PR Cycle Time | 2-3 days | 2-3 days | < 1 day |
| Technical Debt Accumulation | Moderate | High | Low |
| Developer Satisfaction | 7/10 | 8/10 | 9/10 |
| Time to Production (Merged) | 2-4 weeks | 1-2 weeks | 3-5 days |

Note: Merged LOC counts code that lands on the main branch after review and tests. Raw generation output can be higher without review, but often results in rework and higher defect rates.
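To make the bug rate row concrete, here is a minimal sketch of how a team might compute bugs per 1000 merged lines from its own tracker data. The function name and the sample counts are hypothetical; how you attribute bugs to merged code is a team decision this sketch does not settle.

```python
def bugs_per_kloc(bug_count: int, merged_loc: int) -> float:
    """Bugs per 1000 lines of code merged to the main branch."""
    if merged_loc <= 0:
        raise ValueError("merged_loc must be positive")
    if bug_count < 0:
        raise ValueError("bug_count cannot be negative")
    # Multiply before dividing to keep the arithmetic exact for typical inputs.
    return bug_count * 1000 / merged_loc


# Hypothetical sprint: 18 bugs traced back to 1,200 merged lines.
rate = bugs_per_kloc(bug_count=18, merged_loc=1200)
print(f"{rate:.1f} bugs per 1000 LOC")
```

Using merged lines rather than generated lines as the denominator keeps the metric aligned with the note above: code that never lands on the main branch does not count.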

Common Pitfalls and How to Avoid Them

Pitfall: Over‑Reliance on a Single Tool

Teams get comfortable with one AI tool and use it for everything, creating quality blind spots.

Solution: Mandate tool diversity. Use different tools for generation, review, and testing. Track metrics to prove each layer’s value.

Pitfall: Skipping the Review Layer

Teams see fast code generation and ship directly to production, accumulating hidden technical debt.

Solution: Make AI code review (like Propel) a required CI step. Block merges until AI review and tests pass.
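A merge gate of this kind can be sketched in a few lines. The example below is an assumption-heavy illustration, not Propel's API or any CI vendor's: `ReviewFinding`, the severity names, and `merge_allowed` are all hypothetical, and a real pipeline would read findings from whatever the review tool actually exports.

```python
from dataclasses import dataclass

# Hypothetical severity scale; real tools define their own.
SEVERITY_RANK = {"low": 0, "medium": 1, "high": 2, "critical": 3}


@dataclass(frozen=True)
class ReviewFinding:
    severity: str  # one of the keys in SEVERITY_RANK
    message: str


def merge_allowed(findings, tests_passed: bool, block_at: str = "high") -> bool:
    """Allow a merge only if tests pass and no finding reaches the blocking severity."""
    if not tests_passed:
        return False
    threshold = SEVERITY_RANK[block_at]
    return all(SEVERITY_RANK[f.severity] < threshold for f in findings)


findings = [
    ReviewFinding("medium", "Function exceeds complexity budget"),
    ReviewFinding("high", "User input reaches SQL query unescaped"),
]
print(merge_allowed(findings, tests_passed=True))      # blocked by the high finding
print(merge_allowed(findings[:1], tests_passed=True))  # only a medium finding remains
```

Keeping the threshold as a parameter lets a team start permissive (block only on `critical`) and tighten it as trust in the review output grows.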

Pitfall: Not Measuring Impact

Teams adopt AI tools without tracking whether they actually improve quality and productivity.

Solution: Establish baselines. Track bug rate, review time, PR turnaround, and developer satisfaction. Adjust based on data.
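Comparing against a baseline is simple arithmetic, sketched below. The metric names and values are hypothetical placeholders; substitute whatever your team recorded before adopting the tools.

```python
def percent_change(baseline: float, current: float) -> float:
    """Relative change from baseline, in percent (negative means a decrease)."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return (current - baseline) / baseline * 100


# Hypothetical measurements taken before and after adopting an AI stack.
baseline = {"bug_rate_per_kloc": 20.0, "pr_turnaround_days": 2.5}
current = {"bug_rate_per_kloc": 8.0, "pr_turnaround_days": 1.0}

for name, before in baseline.items():
    change = percent_change(before, current[name])
    print(f"{name}: {before} -> {current[name]} ({change:+.0f}%)")
```

For both metrics in this example lower is better, so a negative change is an improvement; for a metric such as developer satisfaction the sign reads the other way.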

The Future of AI Pair Programming

By 2026, we'll see even more specialization in AI development tools. Expect:

- Architecture-aware generation: AI that understands your entire system design

- Real-time pair debugging: AI that helps diagnose production issues

- Cross-team knowledge sharing: AI that learns from your entire organization

- Automated refactoring agents: AI that continuously improves code quality

- Security-first generation: AI that writes secure code by default

But regardless of how sophisticated generation becomes, the principle of tool diversity will remain critical. No single AI model will ever be perfect at both creating and critiquing code—these require fundamentally different optimization objectives.

Frequently Asked Questions

Why can't I just use GitHub Copilot for everything?

While Copilot is excellent for code generation, using it for review creates an echo chamber. The same model that generated code will likely miss its own mistakes. Specialized review tools like Propel use different models and techniques, catching issues Copilot would miss. Studies show 78% fewer bugs when using diverse AI tools.

How much should we budget for AI pair programming tools?

Plan for $50-100 per developer per month for a comprehensive stack. This typically includes a generation tool ($20-30), a review tool like Propel (ROI-based, typically $30-50 value), and supplementary tools. The ROI is typically 3-5x through improved productivity and reduced bugs.
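As a quick worked example of that range, the sketch below scales the $50-100 per-developer figure to a team. The team size of 25 is hypothetical, and the function covers tool cost only, not the ROI estimate.

```python
def monthly_budget_range(developers: int,
                         low_per_dev: int = 50,
                         high_per_dev: int = 100) -> tuple[int, int]:
    """Return the (low, high) monthly tool budget in dollars for a team."""
    if developers < 0:
        raise ValueError("developers cannot be negative")
    if low_per_dev > high_per_dev:
        raise ValueError("low_per_dev must not exceed high_per_dev")
    return developers * low_per_dev, developers * high_per_dev


# Hypothetical team of 25 developers.
low, high = monthly_budget_range(25)
print(f"Monthly: ${low:,} to ${high:,}")
print(f"Annual:  ${low * 12:,} to ${high * 12:,}")
```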

Do AI tools really make developers slower in some cases?

Yes. METR's 2025 study found that experienced open-source developers took 19% longer with AI tools when working on mature repositories they knew well. This happens when review overhead exceeds generation benefits. That's why a separate, efficient AI review layer (like Propel) is crucial: it catches issues quickly without the manual review overhead.

How do we prevent AI from introducing security vulnerabilities?

Use a multi-layer approach: configure your generation tool with secure coding guidelines, use Propel or similar tools for security-focused review, add static analysis tools, and maintain human review for critical paths. Never rely on a single tool for security.

Should we switch all developers to AI tools at once?

No. Start with a pilot team, measure impact, refine your stack, then gradually roll out. This allows you to identify the right tool combinations and train teams properly. Expect 2-3 months for full organizational adoption.

Build Your AI Pair Programming Stack

Focus on clarity and coverage. Combine fast generation with an independent review layer, measure outcomes, and iterate.

- Start with generation (Cursor, Copilot, or Windsurf) for speed and iteration.

- Add specialized review (Propel) to catch security, correctness, and style issues.

- Track metrics: bug rate, review time, PR turnaround, and developer satisfaction.

- Avoid single‑tool echo chambers; keep generation and review independent.

Tip: Treat review like tests—required in CI before merge.

Conclusion: The Multi-Tool Imperative

AI pair programming tools have revolutionized how we write code, but they've also introduced new challenges. The teams that will thrive are those that understand a fundamental truth: no single AI tool can do everything well. Just as you wouldn't use the same tool to write and edit a document, you shouldn't use the same AI to generate and review code.

The future belongs to teams that build diverse AI stacks—combining the speed of tools like Cursor or Copilot with the quality assurance of specialized review tools like Propel. This isn't about using AI for AI's sake; it's about strategically leveraging different AI capabilities to achieve both velocity and quality.

Start with generation, add review, incorporate testing, and continuously measure impact. Your code—and your team—will thank you.

Key Takeaways

- Single-tool AI development creates dangerous echo chambers

- Tool diversity reduces bugs by 78% compared to generation-only approaches

- Different AI models catch different issues; leverage this diversity

- Budget $50-100 per developer for a complete AI stack

- Always pair AI generation with specialized AI review like Propel

- Measure everything: productivity, quality, and developer satisfaction

- Start small, prove value, then scale across your organization

References

- METR. Measuring the impact of early‑2025 AI on experienced OSS developer productivity

- Builder.io. Cursor vs Windsurf vs GitHub Copilot: comparison

- Qodo. Windsurf vs Cursor: AI IDEs tested (2025)

- GitHub. Research: quantifying GitHub Copilot’s impact on developer productivity

- The New Stack. Measuring the ROI of AI coding assistants

- SWE‑bench. Leaderboard: AI code generation benchmarks

- Epoch AI. AI benchmarking dashboards and metrics

- Stanford HAI. The AI Index Report (2025)
