Defeating Nondeterminism in LLM Inference: What It Unlocks for Engineering Teams

Engineers feel nondeterminism every day: the same prompt sometimes yields slightly different outputs, tests flake, benchmarks wobble, and bug reports are hard to reproduce. If we achieve deterministic LLM inference at scale, everyday engineering gets simpler and safer. This post explains why and what it unlocks—without assuming you’re an AI specialist.

First, what causes nondeterminism today?

Two broad sources: stochastic decoding choices and numerical implementation details. Temperature/top‑p sampling intentionally adds randomness during generation. Separately, even with a fixed random seed, parallel math on GPUs can sum numbers in different orders, and because floating point addition is not associative, a different order can round to a different result. Kernel choices, scheduling, quantization, and low‑level libraries can all introduce tiny numeric differences that cascade into different token choices.
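A three-line Python check makes the non-associativity concrete. This is ordinary IEEE 754 double-precision behavior, not anything GPU-specific; GPU kernels run into the same effect when threads combine partial sums in different groupings.

```python
# IEEE 754 doubles round after every addition, so grouping changes the result.
left = (0.1 + 0.2) + 0.3   # 0.1 + 0.2 first rounds to 0.30000000000000004
right = 0.1 + (0.2 + 0.3)  # 0.2 + 0.3 evaluates to exactly 0.5

print(left)           # 0.6000000000000001
print(right)          # 0.6
print(left == right)  # False
```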

Why defeating nondeterminism matters

If model outputs are bit‑for‑bit repeatable for a given input and config, you can treat LLMs like any other deterministic service. That unlocks powerful, boring engineering tools.

GPU-level nondeterminism explained (brief)

GPUs run massively parallel math. Small numeric differences can appear when operations execute in different orders or use different kernels, and floating point addition isn’t associative. In practice, that means two runs can produce slightly different logits, which can flip the next token choice and cascade through the rest of the generation. Common sources:

- Parallel reductions reorder sums across threads/warps → different rounding.

- Float atomics (e.g., atomicAdd) resolve in arbitrary order → non-repeatable totals.

- Library autotuning (cuDNN/cuBLAS) may select different kernels from run to run or across machines, based on timing or heuristics.

- Scheduler/asynchrony changes block/stream completion order between runs.

- Mixed precision and math modes (TF32/FP16/BF16, FMA contraction, flush-to-zero).

- Multi-GPU collectives (NCCL) use different reduction trees/rings by topology.
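To see how reduction order reaches a token choice, here is a minimal pure-Python sketch (no GPU involved) that sums the same four hypothetical partial products two ways: left to right, as a single thread would, and pairwise, as a tree reduction across threads would. The magnitudes are exaggerated so the effect is visible in one step; real kernels usually differ only in the last few bits, which is enough to matter when two logits are nearly tied.

```python
from typing import List


def sequential_sum(xs: List[float]) -> float:
    """Left-to-right accumulation, as a single thread would do it."""
    total = 0.0
    for x in xs:
        total += x
    return total


def tree_sum(xs: List[float]) -> float:
    """Pairwise (tree) reduction, as threads combining partial sums would do it."""
    level = list(xs)
    while len(level) > 1:
        paired = [level[i] + level[i + 1] for i in range(0, len(level) - 1, 2)]
        if len(level) % 2:
            paired.append(level[-1])  # an odd element carries over to the next level
        level = paired
    return level[0] if level else 0.0


def greedy(logits: List[float]) -> int:
    """Index of the largest logit (greedy decoding)."""
    return max(range(len(logits)), key=lambda i: logits[i])


# Hypothetical partial products that make up the logit for token 0.
contributions = [1e16, 1.0, -1e16, 1.0]
logit_b = 0.5  # hypothetical logit for token 1

logit_a_seq = sequential_sum(contributions)  # 1.0: the first 1.0 is absorbed by 1e16, the last survives
logit_a_tree = tree_sum(contributions)       # 0.0: both 1.0s are absorbed in their pairs

print(greedy([logit_a_seq, logit_b]))   # 0
print(greedy([logit_a_tree, logit_b]))  # 1
```

Neither order is wrong; they are different roundings of the same math. Determinism requires using the same order every time.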

1) Reproducible tests and “golden” snapshots

You can snapshot expected outputs for prompts (goldens) and run them in CI like any other regression test. When a dependency, prompt, or model version changes, diffs become crisp and actionable. No more re‑running a test five times to decide whether it flaked or regressed.
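A golden snapshot check can be very small. The sketch below assumes the model call is already deterministic; `fake_generate` is a hypothetical stand-in for a real client, and the `<name>.golden.txt` file layout is an illustrative choice rather than any standard.

```python
import difflib
import tempfile
from pathlib import Path


def check_golden(name: str, output: str, golden_dir: Path, update: bool = False) -> None:
    """Compare output with a stored golden file; record it if missing or if update=True."""
    path = golden_dir / f"{name}.golden.txt"
    if update or not path.exists():
        # Recording should be an explicit, reviewable change, like updating any snapshot.
        path.write_text(output, encoding="utf-8")
        return
    expected = path.read_text(encoding="utf-8")
    if output != expected:
        diff = "\n".join(
            difflib.unified_diff(
                expected.splitlines(),
                output.splitlines(),
                fromfile=f"{name} (golden)",
                tofile=f"{name} (actual)",
                lineterm="",
            )
        )
        raise AssertionError(f"golden mismatch for {name!r}:\n{diff}")


def fake_generate(prompt: str) -> str:
    """Hypothetical stand-in for a deterministic (greedy, pinned) model call."""
    return "Summary: " + prompt.strip().lower()


with tempfile.TemporaryDirectory() as tmp:
    goldens = Path(tmp)
    check_golden("invoice_summary", fake_generate("Invoice 42 is overdue"), goldens)  # records
    check_golden("invoice_summary", fake_generate("Invoice 42 is overdue"), goldens)  # passes
    try:
        check_golden("invoice_summary", fake_generate("Invoice 43 is overdue"), goldens)
    except AssertionError as err:
        print(err)  # a unified diff showing the 42 -> 43 change
```

In CI you may prefer to fail when a golden is missing instead of recording it silently; the `update` flag keeps re-recording a deliberate step.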

2) Reliable benchmarks and A/B experiments

Determinism removes run‑to‑run noise. You can attribute changes in accuracy, latency, or cost to actual code or prompt changes rather than sampling variance. That lowers the sample size and time needed to detect real effects, speeding up model and prompt iteration.

3) Safer rollbacks and on‑call debugging

When incidents happen, deterministic record/replay is a superpower. Given a request trace, you can reproduce the failure locally and verify the fix before rolling forward. If you roll back a model or prompt, you can be confident customers will see the same (previously good) outputs.
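Record/replay needs little more than serializing the request alongside the pinned configuration and the output. The sketch below is hypothetical throughout: the `Trace` fields, the `example-model@v1` identifier, and the two `generate` functions are placeholders for whatever your stack actually records and calls.

```python
import json
from dataclasses import asdict, dataclass
from typing import Callable, Tuple


@dataclass(frozen=True)
class Trace:
    prompt: str
    model: str     # pinned model/version identifier
    decoding: str  # e.g. "greedy"
    output: str


def record(prompt: str, model: str, decoding: str, generate: Callable[[str], str]) -> str:
    """Run the request and serialize everything needed to replay it."""
    trace = Trace(prompt=prompt, model=model, decoding=decoding, output=generate(prompt))
    return json.dumps(asdict(trace), sort_keys=True)


def replay(trace_json: str, model: str, generate: Callable[[str], str]) -> Tuple[bool, str]:
    """Re-run a recorded request on the given model; return (same_output, new_output)."""
    trace = Trace(**json.loads(trace_json))
    if trace.model != model:
        raise ValueError(f"trace was recorded on {trace.model!r}, not {model!r}")
    new_output = generate(trace.prompt)
    return new_output == trace.output, new_output


def buggy_generate(prompt: str) -> str:
    return prompt.upper()  # hypothetical faulty behavior seen in production


def fixed_generate(prompt: str) -> str:
    return prompt.capitalize()  # hypothetical candidate fix


trace = record("refund order 17", "example-model@v1", "greedy", buggy_generate)
print(replay(trace, "example-model@v1", buggy_generate))  # (True, 'REFUND ORDER 17')
print(replay(trace, "example-model@v1", fixed_generate))  # (False, 'Refund order 17')
```

The first replay confirms the incident reproduces; the second confirms the fix changes the output for that exact request. Refusing to replay on a different model keeps the comparison meaningful.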

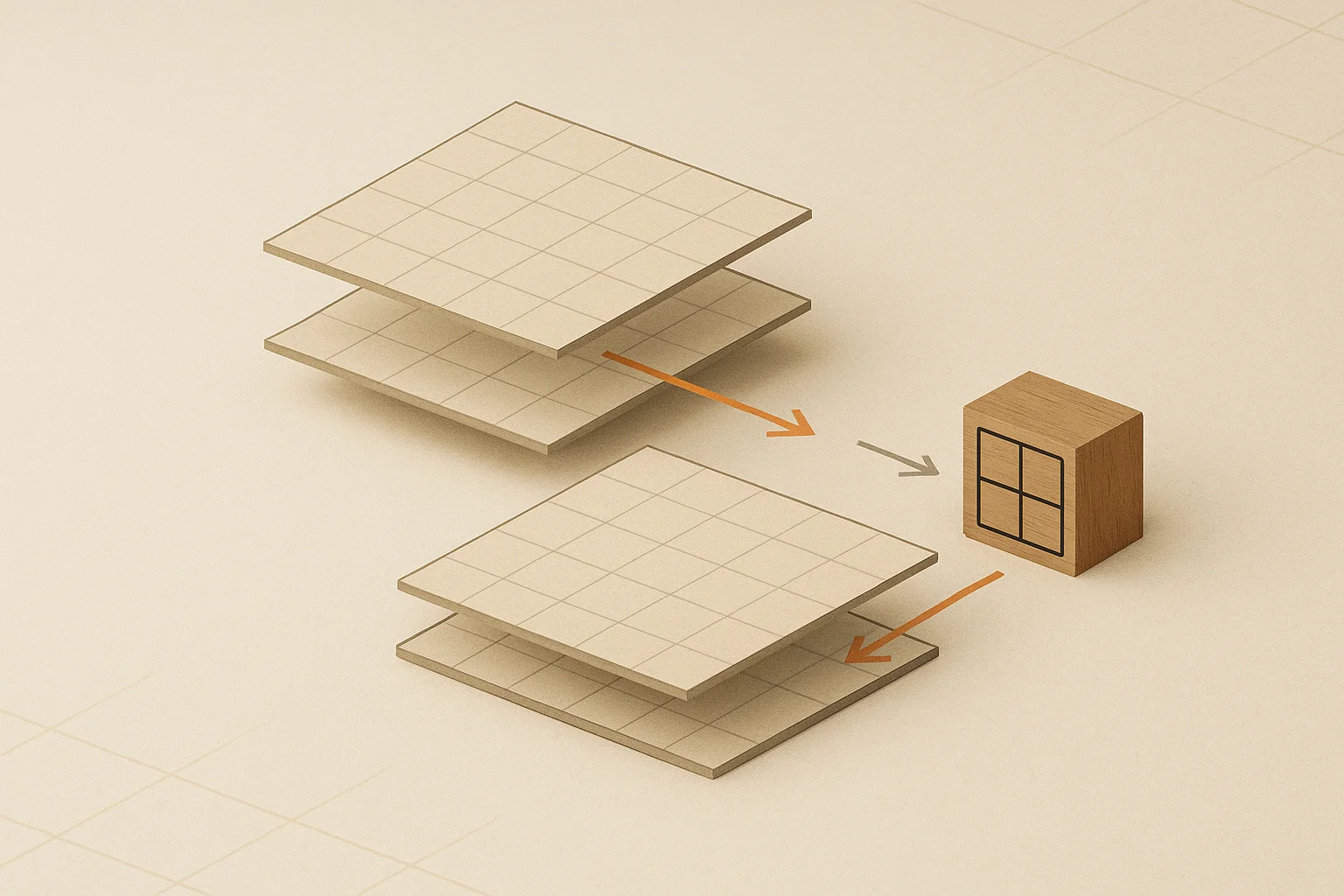

4) Aggressive caching and cost control

With stable outputs, content‑addressable caching becomes practical: key caches by a hash of everything that shapes the output (prompt, tool calls, retrieved context, plus the pinned model and decoding config) and reuse results across requests, regions, and deployments. This cuts inference spend and smooths tail latency without risking unexpected output drift.
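A content-addressable key is a hash over a canonical serialization of every input that determines the output. This sketch assumes requests are JSON-serializable; the field names, the `example-model@v1` identifier, and the in-memory `dict` store are illustrative, and a production cache would live in a shared store.

```python
import hashlib
import json
from typing import Any, Callable, Dict


def cache_key(prompt: str, tool_calls: list, retrieved_context: list, config: Dict[str, Any]) -> str:
    """SHA-256 over a canonical JSON encoding of every input that shapes the output."""
    payload = {
        "prompt": prompt,
        "tool_calls": tool_calls,
        "retrieved_context": retrieved_context,
        "config": config,  # pinned model, decoding mode, library versions, ...
    }
    # sort_keys and fixed separators make logically equal payloads serialize identically.
    canonical = json.dumps(payload, sort_keys=True, separators=(",", ":"), ensure_ascii=False)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


class ContentAddressableCache:
    """Minimal in-memory store; only safe to share across requests when outputs are deterministic."""

    def __init__(self) -> None:
        self._store: Dict[str, str] = {}

    def get_or_compute(self, key: str, compute: Callable[[], str]) -> str:
        if key not in self._store:
            self._store[key] = compute()
        return self._store[key]


calls = 0


def expensive_generate() -> str:
    """Hypothetical stand-in for a model call; counts invocations."""
    global calls
    calls += 1
    return "Paris"


cache = ContentAddressableCache()
key_a = cache_key("Capital of France?", [], ["doc-17"], {"model": "example-model@v1", "mode": "greedy"})
key_b = cache_key("Capital of France?", [], ["doc-17"], {"mode": "greedy", "model": "example-model@v1"})
print(key_a == key_b)  # True: dict key order does not change the hash
cache.get_or_compute(key_a, expensive_generate)
cache.get_or_compute(key_b, expensive_generate)
print(calls)  # 1: the second request is served from the cache
```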

5) Stronger compliance and auditability

Regulated workflows (financial advice, healthcare, legal summaries) need traceability. If a specific configuration always yields the same answer, reviewers can sign off on versions, store approvals, and later prove exactly what the system would have returned.

6) Cleaner developer experience

Docs, demos, and tutorials stay consistent. Agent pipelines can use record/replay for quick iteration. Snapshot diffs highlight the precise impact of small prompt edits, making code review much more like reviewing normal code changes.

What would a deterministic setup look like?

Conceptually: fix decoding to greedy or nucleus with a fixed seed; pin model, tokenizer, and numeric libraries; use deterministic kernels; and ensure the same pre/post‑processing everywhere. For distributed or quantized inference, lock versions and settings so the same inputs traverse the same paths. The details are tricky, but the surface area is familiar to anyone who has ever pinned dependencies for a production service.
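Decoding is the easiest piece to pin down. The plain-Python sketch below, using hypothetical logits, shows greedy selection with an explicit tie-break and nucleus (top-p) sampling driven by a dedicated seeded `random.Random` instance. Both are reproducible only if the logits themselves are bit-identical, which is what the kernel and library pinning is for.

```python
import math
import random
from typing import List


def softmax(logits: List[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def greedy(logits: List[float]) -> int:
    # Highest logit wins; exact ties break toward the lowest token id.
    return max(range(len(logits)), key=lambda i: (logits[i], -i))


def nucleus_sample(logits: List[float], top_p: float, rng: random.Random) -> int:
    probs = softmax(logits)
    # Sort by probability, breaking ties by token id so the ordering is fully determined.
    order = sorted(range(len(probs)), key=lambda i: (-probs[i], i))
    nucleus, cumulative = [], 0.0
    for i in order:
        nucleus.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break
    # Draw from the nucleus with the caller's seeded generator (weights need not sum to 1).
    return rng.choices(nucleus, weights=[probs[i] for i in nucleus], k=1)[0]


logits = [2.0, 1.5, 0.3, -1.0]  # hypothetical next-token logits
print(greedy(logits))  # 0

rng_a, rng_b = random.Random(1234), random.Random(1234)
run_a = [nucleus_sample(logits, 0.9, rng_a) for _ in range(5)]
run_b = [nucleus_sample(logits, 0.9, rng_b) for _ in range(5)]
print(run_a == run_b)  # True: same seed and same logits give the same draws
```

Passing a per-request `random.Random` instead of using the module-level generator keeps unrelated code from consuming random numbers and shifting the sequence.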

Trade‑offs and practical guardrails

Determinism can reduce creative diversity and sometimes costs a bit of throughput when using deterministic kernels. A pragmatic approach is tiered control: deterministic in tests, evaluations, and critical user flows; controlled randomness (with sampling) in ideation surfaces. Make the mode explicit, log it, and include it in your cache key.
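Making the mode explicit can be as simple as an enum resolved per surface, logged on every request, and folded into the cache key. The surface names and the mapping below are hypothetical, and defaulting unknown surfaces to deterministic is one conservative choice among several.

```python
import enum
import hashlib
import json
import logging
from typing import Optional

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("inference")


class DecodingMode(enum.Enum):
    DETERMINISTIC = "deterministic"  # greedy decoding, deterministic kernels
    SAMPLED = "sampled"              # temperature/top-p sampling


# Hypothetical surface-to-mode policy.
SURFACE_MODES = {
    "ci_eval": DecodingMode.DETERMINISTIC,
    "checkout_assistant": DecodingMode.DETERMINISTIC,
    "brainstorm": DecodingMode.SAMPLED,
}


def resolve_mode(surface: str) -> DecodingMode:
    """Pick the mode for a surface and log it so every response is attributable."""
    mode = SURFACE_MODES.get(surface, DecodingMode.DETERMINISTIC)
    log.info("surface=%s decoding_mode=%s", surface, mode.value)
    return mode


def request_key(prompt: str, mode: DecodingMode, seed: Optional[int] = None) -> str:
    """Cache key that includes the mode (and seed), so the modes never share entries."""
    payload = {"prompt": prompt, "mode": mode.value, "seed": seed}
    return hashlib.sha256(json.dumps(payload, sort_keys=True).encode("utf-8")).hexdigest()


mode = resolve_mode("brainstorm")
k_det = request_key("Name ideas for a bakery", DecodingMode.DETERMINISTIC)
k_smp = request_key("Name ideas for a bakery", mode, seed=7)
print(k_det != k_smp)  # True: a sampled answer is never served for a deterministic request
```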

How your workflow changes if we get this right

- Add prompt snapshot tests to CI and block merges on diffs.

- Introduce record/replay for incidents and bug reports.

- Adopt content‑addressable caches to cut cost/latency.

- Run smaller, faster A/B experiments with stable outputs.

- Version prompts/models like code, with approvals for regulated flows.

- Pin inference stacks per environment to avoid config drift.

Bottom line

If we defeat nondeterminism in LLM inference, AI stops feeling like a lab demo and starts behaving like dependable infrastructure. The result is better tests, faster iteration, cheaper operations, and safer user experiences. Even partial progress toward determinism is worth it—start by stabilizing your evaluation and CI pipelines, then expand to the user‑facing paths that benefit most.

Further reading and sources

- PyTorch: Reproducibility guide, https://pytorch.org/docs/stable/notes/randomness.html

- NVIDIA cuDNN Developer Guide: Reproducibility (deterministic algorithms)

- NVIDIA cuBLAS documentation: Reproducibility (deterministic results and CUBLAS_WORKSPACE_CONFIG)

- TensorFlow: tf.config.experimental.enable_op_determinism (op determinism, TF_DETERMINISTIC_OPS)

- JAX FAQ: Reproducibility guidance and flags

- ONNX Runtime: Reproducibility guidance

- NCCL: Environment variables (algorithms, protocols)
