AI Code Review Benchmarks (2026)

Can you trust a benchmark written by the winning tool?

Benchmark bias is common. Many benchmarks are authored by the same vendors they are meant to evaluate, which introduces bias in PR selection, labeling, and scoring.

To reduce this bias, we evaluated Propel alongside six other AI code review tools using an externally authored benchmark suite of pull requests drawn from production open-source repositories.

The benchmark scores each tool on three standard metrics:

Precision: the percentage of flagged issues that are real problems.

Recall: the percentage of real problems that the tool catches.

F-score: a single overall score that combines precision and recall as their harmonic mean.

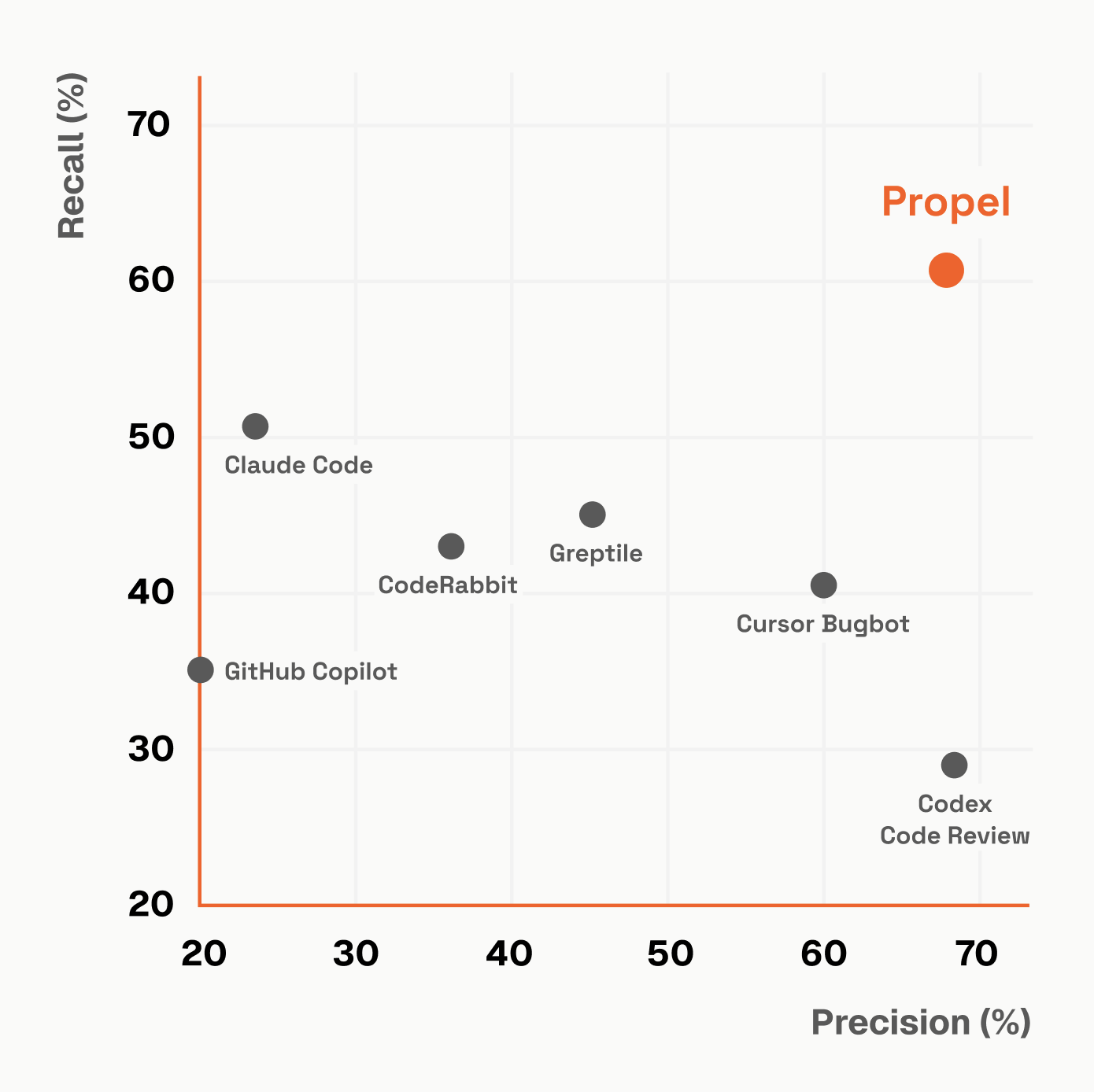

Results Overview

Across all evaluated repositories, Propel led with an F-score of 64%, followed by Cursor Bugbot with an F-score of 49%, and Greptile with an F-score of 45%.

Codex Code Review tied Propel for the highest precision at 68%. However, it had the lowest recall of any tool at 29%, which indicates a bias toward precision at the cost of coverage.

Benchmark Results Data

Table sorted by F-score (highest to lowest) to highlight overall performance.

| Tool | Precision | Recall | F-score |
|---|---|---|---|
| Propel | 68% | 61% | 64% |
| Cursor Bugbot | 60% | 41% | 49% |
| Greptile | 45% | 45% | 45% |
| Codex Code Review | 68% | 29% | 41% |
| CodeRabbit | 36% | 43% | 39% |
| Claude Code | 23% | 51% | 31% |
| GitHub Copilot | 20% | 34% | 25% |
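As a rough check, the F-score column can be recomputed from the precision and recall columns in the table above. The sketch below assumes the F-score is the standard harmonic mean of the two; the names `published`, `f_score`, and `recomputed` are illustrative and not part of the benchmark. Because the published inputs are rounded to whole percentages, a recomputed value can differ from the published one by about a point.

```python
# Precision and recall as published in the results table (percentages).
published = {
    "Propel": (68, 61),
    "Cursor Bugbot": (60, 41),
    "Greptile": (45, 45),
    "Codex Code Review": (68, 29),
    "CodeRabbit": (36, 43),
    "Claude Code": (23, 51),
    "GitHub Copilot": (20, 34),
}


def f_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (assumed to be the F1 score)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Recompute the F-score for every tool from its rounded inputs.
recomputed = {tool: f_score(p, r) for tool, (p, r) in published.items()}

# A plain average would hide the gap between precision and recall;
# the harmonic mean pulls the score toward the weaker of the two.
codex_p, codex_r = published["Codex Code Review"]
codex_arithmetic_mean = (codex_p + codex_r) / 2

for tool, value in recomputed.items():
    print(f"{tool:<18} {value:5.1f}%")
print(f"Codex arithmetic mean: {codex_arithmetic_mean:.1f}%")
```

For Codex Code Review, the arithmetic mean of 68% and 29% is 48.5%, while the harmonic mean is about 40.7%, which is why its high precision does not offset its low recall in the ranking.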

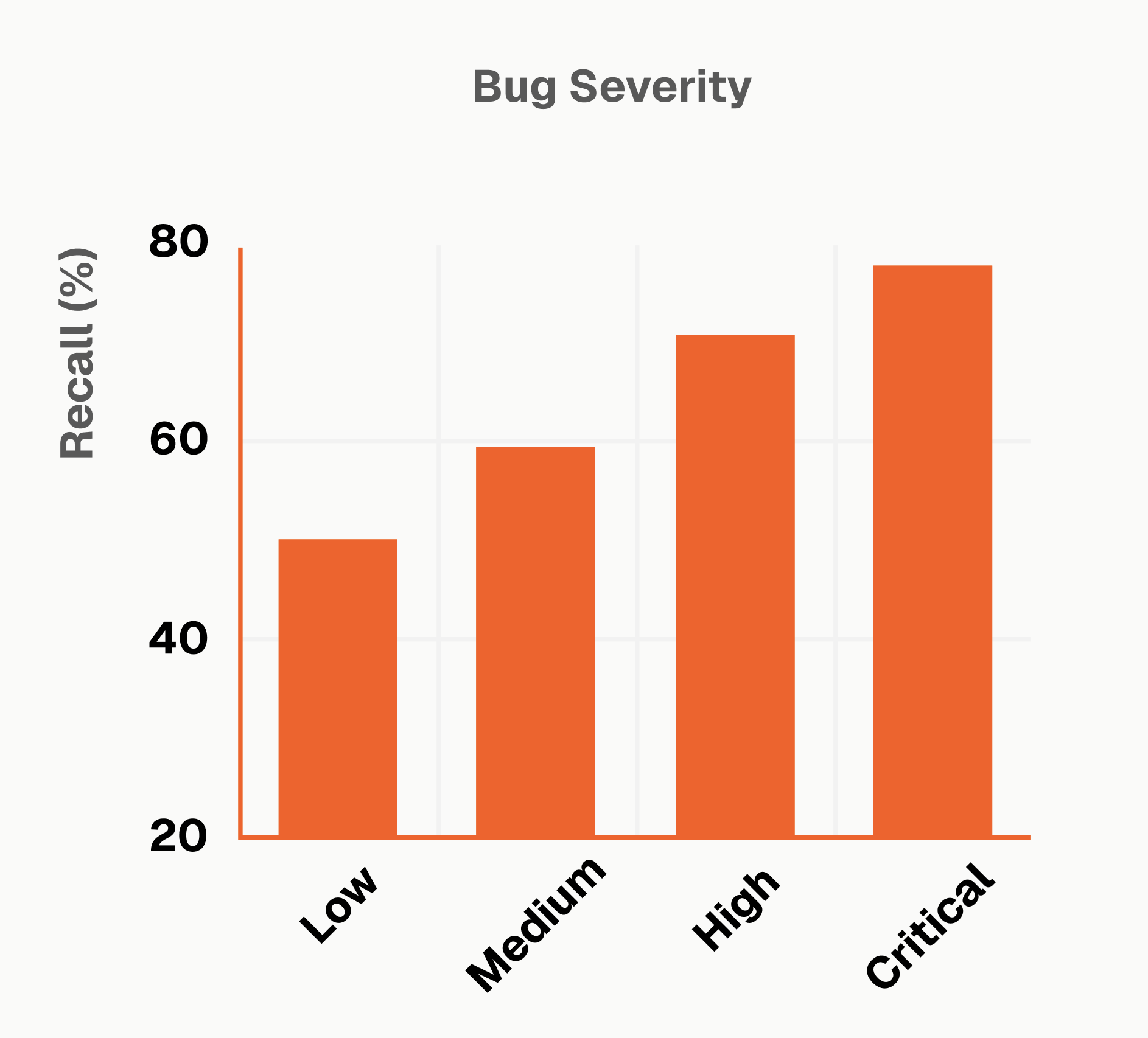

Bugs by Severity Levels

Benchmarks often treat all misses equally, but engineering teams do not. Missing a low-severity style issue is very different from missing a critical correctness or security bug.

Severity-level recall matters because higher-impact misses carry disproportionately higher risk for engineering teams.

Propel's recall is highest on the higher-impact bugs: 77.8% for Critical and 70.7% for High.

| Bug Severity | Critical | High | Medium | Low |
|---|---|---|---|---|
| Recall | 77.8% | 70.7% | 59.6% | 50.0% |
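Severity-level recall is ordinary recall computed separately within each severity bucket. A minimal sketch of that grouping follows, assuming each labeled real issue carries a severity and a flag for whether the tool caught it. The function name `recall_by_severity` and the toy labels are invented for illustration and are not the benchmark's data or code.

```python
from collections import defaultdict


def recall_by_severity(issues):
    """Return recall per severity.

    issues: iterable of (severity, was_caught) pairs, one per labeled real issue.
    """
    caught = defaultdict(int)
    total = defaultdict(int)
    for severity, was_caught in issues:
        total[severity] += 1
        if was_caught:
            caught[severity] += 1
    # Every severity in `total` has at least one issue, so no division by zero.
    return {severity: caught[severity] / total[severity] for severity in total}


# Toy labels, made up for this example only.
toy_issues = [
    ("critical", True),
    ("critical", True),
    ("critical", False),
    ("critical", True),
    ("low", True),
    ("low", False),
]

toy_recall = recall_by_severity(toy_issues)
print(toy_recall)
```

Reporting recall this way keeps a miss on a critical bug from being averaged away by a large number of caught low-severity issues.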

Benchmark Methodology

Independent Benchmark Data

Benchmarks are often biased toward their authors. To reduce this risk, we evaluated Propel using a benchmark suite authored by another company. Propel did not influence repository selection, pull request selection, or labeling, and ran the evaluation only to produce its own results. The benchmark design, the annotations, and the data for the other tested tools were all produced externally.

Out-of-the-Box Configuration

Propel was evaluated using its base configuration with no repository-specific tuning, custom rules, or historical learning.

This reflects how teams experience Propel immediately after installation.

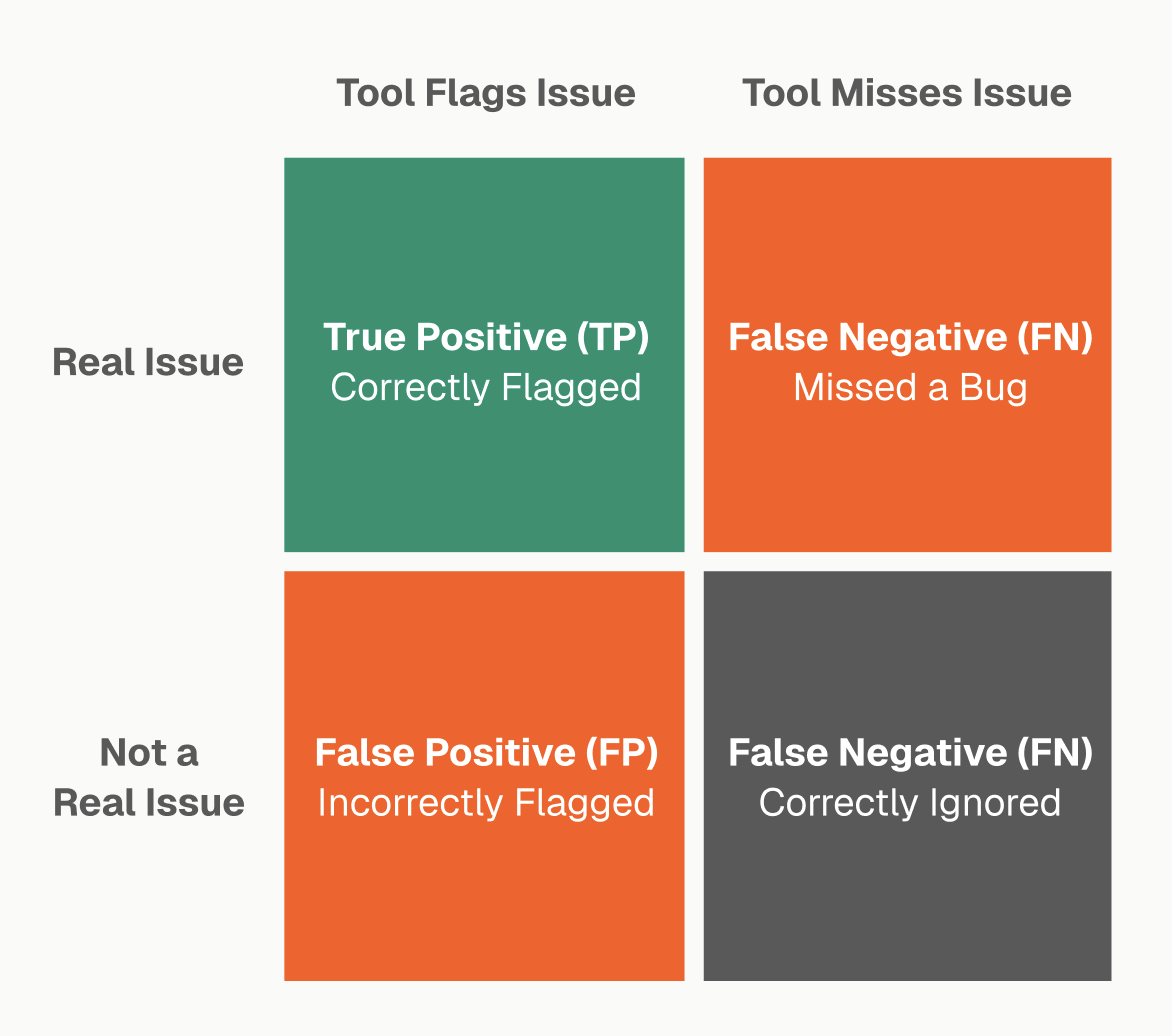

Scoring

Tools were evaluated using precision, recall, and F-score, which are built from three counts:

- A true positive (TP): a real issue that is correctly flagged.

- A false positive (FP): a flagged issue that is not real.

- A false negative (FN): a real issue that is missed.

Precision measures how often reported findings are correct.

Precision = TP ÷ (TP + FP)

Recall measures how many real issues are successfully caught.

Recall = TP ÷ (TP + FN)

F-score summarizes both accuracy and coverage in a single metric.

F-score = 2 × (precision × recall) ÷ (precision + recall)

This formulation penalizes tools that optimize for precision at the cost of missing issues, as well as tools that maximize recall by generating excessive noise.
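The three formulas above can be sketched directly from TP, FP, and FN counts. The `Counts` class, the function names, and the three example tools below are hypothetical and chosen only to show the penalty in both directions; they are not taken from the benchmark.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Counts:
    tp: int  # real issues correctly flagged
    fp: int  # flagged issues that are not real
    fn: int  # real issues that were missed


def precision(c: Counts) -> float:
    flagged = c.tp + c.fp
    return c.tp / flagged if flagged else 0.0


def recall(c: Counts) -> float:
    real = c.tp + c.fn
    return c.tp / real if real else 0.0


def f_score(c: Counts) -> float:
    p, r = precision(c), recall(c)
    return 2 * (p * r) / (p + r) if (p + r) else 0.0


# Hypothetical tools reviewing the same 10 real issues.
cautious = Counts(tp=3, fp=1, fn=7)   # few comments, most of them right
noisy = Counts(tp=8, fp=24, fn=2)     # catches a lot, buried in noise
balanced = Counts(tp=6, fp=4, fn=4)   # moderate on both axes

for name, c in [("cautious", cautious), ("noisy", noisy), ("balanced", balanced)]:
    print(f"{name:<9} P={precision(c):.2f} R={recall(c):.2f} F={f_score(c):.2f}")
```

In this made-up example the balanced tool scores highest on F-score even though the cautious tool has the best precision and the noisy tool has the best recall.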

Conservative Adjustments

To make the comparison stricter:

- Propel is designed to catch more than just correctness bugs, including performance and efficiency issues. To ensure a fair comparison, Propel was configured to surface only bug-related findings for this evaluation. Performance, architectural feedback, code duplication, and similar categories were excluded via configuration.

- Two cases were excluded due to faulty or ambiguous benchmark data.

Note: Neither of these adjustments increased any of Propel's final scores.

Conclusion

Engineering teams span a wide spectrum. Some want strong results immediately with minimal setup. Others want deep customization tailored to their company, repositories, teams, and individual developers.

With Propel, teams get exceptional out-of-the-box results without giving up deep customization.

Propel delivers strong performance out of the box on externally authored benchmarks, while also being designed to improve over time as it learns from your codebase, review patterns, and standards. Teams can start with immediate value and let performance compound as Propel adapts to how they build software.

Ship faster. Ship better. Ship with Propel.
