What Does LGTM Mean in Code Review? When to Comment (and When to Wait)

Quick answer

LGTM stands for Looks Good To Me. In code review, it signals that the reviewer believes the pull request is ready to merge. Mature teams reserve LGTM for changes that have already passed automated checks, ownership policies, and risk review; used any earlier, it becomes shorthand for a rubber-stamp approval.

The internet adopted LGTM long before today's automation-heavy workflows. In 2025 the comment still appears across countless repositories, yet its meaning varies wildly. This guide explains where the phrase came from, how to use it responsibly, and how Propel Code keeps an LGTM from waving bad code through to merge.

LGTM vs. other approval phrases

| Phrase | Typical meaning | Clears the way to merge? | Propel Code automation |
|---|---|---|---|
| LGTM | Reviewer approves with no outstanding blockers. | Yes, once checks and policies pass. | Propel Code verifies risk score, ownership, and policy gates before allowing merge. |
| Ship it | Final go-ahead after approvals and releases are aligned. | Yes, usually from the release owner. | Propel Code can auto-merge when a ship-it label is applied. |
| PTAL | "Please take a look": the author is asking for another review; not an approval. | No. | Propel Code notifies owners when a PTAL goes unanswered. |
| Nit | Minor suggestion; optional before merge. | No, and addressing it is optional unless policy dictates otherwise. | Propel Code classifies comment severity and tracks nit acceptance rates. |
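To make the vocabulary in the table concrete, here is a minimal Python sketch that classifies a review comment by its leading phrase. It is a hypothetical heuristic, not how Propel Code classifies comments: the `Intent` enum, the regex patterns, and `counts_as_approval` are illustrative names. Negations are checked first so that a comment like "Not LGTM yet" is never mistaken for an approval.

```python
import re
from enum import Enum


class Intent(Enum):
    """What a review comment signals, mirroring the phrases in the table above."""
    APPROVAL = "approval"      # LGTM: no outstanding blockers
    SHIP = "ship"              # Ship it: final go-ahead
    REQUEST_REVIEW = "ptal"    # PTAL: author asks for another look
    NIT = "nit"                # Minor, optional suggestion
    DECLINE = "decline"        # "Not LGTM": explicit non-approval
    OTHER = "other"            # Anything this heuristic does not recognize


# Ordered (pattern, intent) pairs matched against the start of the comment.
# Negations come first so "Not LGTM yet" is never read as an approval.
_PATTERNS = [
    (re.compile(r"(not|no)\s+lgtm\b"), Intent.DECLINE),
    (re.compile(r"lgtm\b"), Intent.APPROVAL),
    (re.compile(r"ship\s+it\b"), Intent.SHIP),
    (re.compile(r"ptal\b"), Intent.REQUEST_REVIEW),
    (re.compile(r"nit(pick)?\b"), Intent.NIT),
]


def classify(comment: str) -> Intent:
    """Classify a comment by its leading phrase (hypothetical heuristic)."""
    text = comment.strip().lower()
    for pattern, intent in _PATTERNS:
        if pattern.match(text):  # re.match anchors at the start of the string
            return intent
    return Intent.OTHER


def counts_as_approval(comment: str) -> bool:
    """True for approval phrases; the merge itself should still wait on checks and policies."""
    return classify(comment) in (Intent.APPROVAL, Intent.SHIP)


print(classify("LGTM, nit: consider extracting helper"))  # Intent.APPROVAL
print(classify("Not LGTM yet: security scan flagged two medium issues."))  # Intent.DECLINE
```

A comment classified as an approval only captures the reviewer's intent. Per the table, LGTM should clear the way to merge only once checks and policies have passed.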

Where did LGTM come from?

The phrase dates back to early open source mailing lists. Google popularized it in their internal review tooling and Gerrit workflows, and it spread across GitHub, GitLab, and Bitbucket. The simplicity makes it a perfect quick approval, but that same brevity is why teams now question whether LGTM represents real review or just momentum.

In AI-powered environments, LGTM needs guardrails. Propel Code keeps the original intent intact by pairing every LGTM with automated risk scoring, policy checks, and a structured summary that documents why the change is safe.

When should you actually comment LGTM?

- All checks are green: Static analysis, tests, and deployment previews have passed. If you rely on Propel Code, confirm the PR's risk score sits in the safe range.

- Ownership requirements are satisfied: Code Owners or domain experts have weighed in. Propel Code policies can require approval from specific teams automatically.

- Context is documented: There is a clear summary and roll-forward plan. Propel Code attaches an AI-generated brief so future readers understand intent.

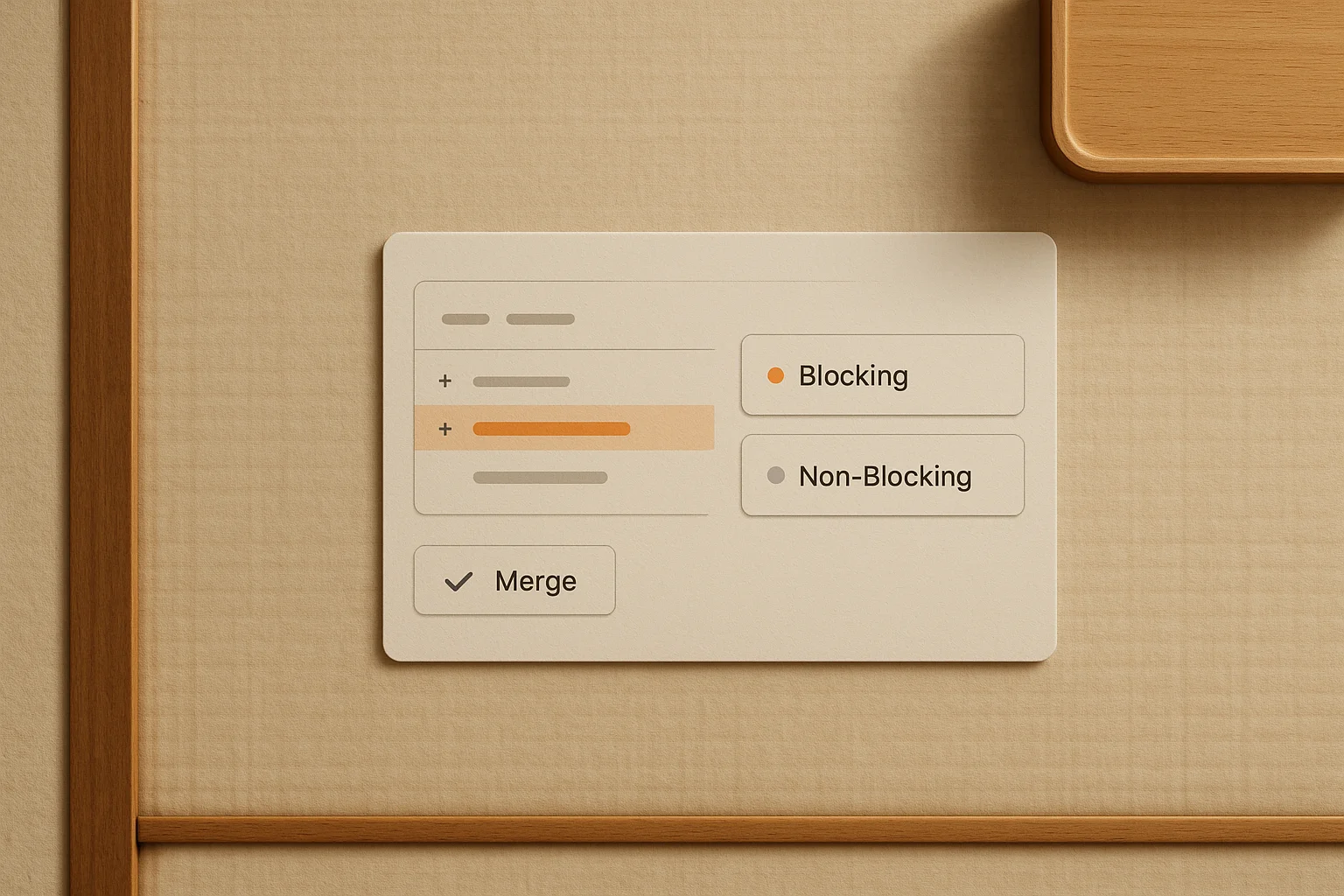

- No unresolved blocking feedback: Suggestions may remain, but nothing that violates standards or introduces risk. If something lingers, mark it as non-blocking explicitly.

Risks of casual LGTM comments

Many teams treat LGTM as a courtesy, yet new contributors often read it as a formal approval even when the reviewer meant it as encouragement. The main risks include:

- Rubber-stamp merges: Approvals land before tests finish or before security scans run. Propel Code prevents this by blocking merges until required checks report back.

- Missing accountability: If everyone writes LGTM without reviewing the diff, code quality falls. Use analytics in Propel Code and review metrics dashboards to monitor participation.

- Confusing expectations: New developers may not know if LGTM requires action. Cross-link this post with our nitpicks guide so the vocabulary stays consistent.

How Propel Code safeguards LGTM approvals

Propel Code acts like a co-reviewer that never tires. Every pull request receives a risk score, a policy evaluation, and an AI-written summary that documents review context. When someone writes LGTM, Propel Code checks:

- Risk score thresholds: If the change touches sensitive systems or exceeds size limits, Propel Code routes it to senior reviewers before allowing merge.

- Policy automation: Branch rules, security approvals, and compliance sign-offs must pass. This mirrors the advice in our automation playbook.

- Comment classification: Propel Code detects unresolved blocking feedback. If a reviewer left a must-fix comment, the tool keeps a later LGTM from merging the pull request prematurely.
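The three checks above can be sketched as a single merge gate. This is a minimal Python illustration under assumed inputs, not Propel Code's implementation: the `PullRequest` fields, the sensitive path prefixes, and the 400-line size limit are hypothetical stand-ins for whatever your team configures.

```python
from dataclasses import dataclass, field

# Hypothetical team settings; real sensitive paths and size limits vary by team.
SENSITIVE_PREFIXES = ("auth/", "billing/", "infra/")
MAX_CHANGED_LINES = 400


@dataclass
class PullRequest:
    changed_files: list[str]
    changed_lines: int
    failing_policies: list[str] = field(default_factory=list)  # unmet branch, security, or compliance rules
    unresolved_blocking: int = 0  # must-fix comments still open
    senior_approved: bool = False


def needs_senior_review(pr: PullRequest) -> bool:
    """Risk threshold: sensitive paths or an oversized diff require a senior reviewer."""
    touches_sensitive = any(p.startswith(SENSITIVE_PREFIXES) for p in pr.changed_files)
    return touches_sensitive or pr.changed_lines > MAX_CHANGED_LINES


def merge_blockers(pr: PullRequest) -> list[str]:
    """Reasons an LGTM alone should not merge this PR; an empty list means clear."""
    blockers = []
    if needs_senior_review(pr) and not pr.senior_approved:
        blockers.append("high risk: route to senior reviewer")
    blockers.extend(f"policy: {name}" for name in pr.failing_policies)
    if pr.unresolved_blocking:
        blockers.append(f"{pr.unresolved_blocking} unresolved blocking comment(s)")
    return blockers


pr = PullRequest(changed_files=["billing/invoice.py"], changed_lines=40, unresolved_blocking=1)
print(merge_blockers(pr))
# ['high risk: route to senior reviewer', '1 unresolved blocking comment(s)']
```

Returning a list of reasons rather than a bare boolean lets the gate explain itself in the PR, which keeps the block from feeling arbitrary to the author.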

Sample LGTM templates that set clear expectations

Approval with reminders

LGTM! Please make sure the integration test jobs finish before merging. Propel Code shows zero high-risk flags on this diff.

Approval with follow-up task

LGTM after you capture the new config in docs. Filing a follow-up card now and logging it in Propel Code so the team remembers.

Conditional approval

LGTM once the CI smoke test goes green. Propel Code flagged the flaky test in qa-service, so rerun before merging.

Declining LGTM

Not LGTM yet: security scan flagged two medium issues. Handing this to Propel Code's policy workflow so it blocks the merge until resolved.

Build a shared vocabulary around approvals

Clear language speeds reviews and reduces friction. Pair this article with a short glossary or link to our upcoming cheat sheet so everyone uses LGTM, nit, and heads-up consistently. You can embed that glossary directly into Propel Code's reviewer prompt so AI-generated comments match team tone.

LGTM readiness checklist

- Automated tests, linting, and security scans are green.

- Propel Code risk score is within your team's safe band.

- Required reviewers or Code Owners have signed off.

- Open comments are marked non-blocking or resolved.

- Deployment or rollback plan is documented in the PR.
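One way to apply the checklist before commenting is to encode it as data and draft the review comment from whatever is still missing. The sketch below is hypothetical: the status keys are made-up names, and how you fill them in (by hand, from CI, or from a tool like Propel Code) is left open.

```python
# The five checklist items above, keyed by hypothetical field names.
CHECKLIST = {
    "checks_green": "Automated tests, linting, and security scans are green.",
    "risk_in_band": "Propel Code risk score is within your team's safe band.",
    "owners_signed_off": "Required reviewers or Code Owners have signed off.",
    "comments_settled": "Open comments are marked non-blocking or resolved.",
    "rollback_documented": "Deployment or rollback plan is documented in the PR.",
}


def missing_items(status: dict[str, bool]) -> list[str]:
    """Return checklist items not yet satisfied; keys absent from status count as unmet."""
    return [text for key, text in CHECKLIST.items() if not status.get(key, False)]


def draft_review_comment(status: dict[str, bool]) -> str:
    """Write LGTM only when every item passes; otherwise list what is still open."""
    missing = missing_items(status)
    if not missing:
        return "LGTM"
    bullets = "\n".join(f"- {item}" for item in missing)
    return f"Not LGTM yet:\n{bullets}"


print(draft_review_comment(dict.fromkeys(CHECKLIST, True)))  # LGTM
```

Treating a missing key as unmet is deliberate: an item nobody has confirmed should hold back the LGTM rather than slip through.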

FAQ

Is LGTM the same as clicking Approve?

On GitHub and GitLab, LGTM is just text until someone hits the Approve button. Propel Code can require an actual approval and confirm all checks complete before enabling merge.
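On GitHub the distinction is visible in the REST API: a formal approval is a pull request review submitted with `event` set to `APPROVE`, whereas "LGTM" posted as an ordinary comment records no approval. The Python sketch below only builds the request with the standard library and does not send it; the owner, repository, pull request number, and token are placeholders.

```python
import json
import urllib.request


def build_approval_request(owner: str, repo: str, pull_number: int,
                           token: str, body: str = "LGTM") -> urllib.request.Request:
    """Build (but do not send) a GitHub REST request that submits an approving review.

    The body text is just the review message; event="APPROVE" is what records
    the formal approval on the pull request.
    """
    url = f"https://api.github.com/repos/{owner}/{repo}/pulls/{pull_number}/reviews"
    payload = json.dumps({"body": body, "event": "APPROVE"}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=payload,
        method="POST",
        headers={
            "Accept": "application/vnd.github+json",
            "Authorization": f"Bearer {token}",
            "X-GitHub-Api-Version": "2022-11-28",
        },
    )


# Placeholder values; urllib.request.urlopen(req) would actually submit the review.
req = build_approval_request("octo-org", "example-repo", 42, token="<your-token>")
```

Even a real approval only satisfies the review requirement; branch protection rules still decide whether required checks must pass before the merge button unlocks.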

Should I write LGTM if I only skimmed the code?

No. If you lack time, leave a comment requesting another reviewer or mark your feedback as non-blocking. Propel Code analytics highlight when reviewers skip thorough checks, helping leads rebalance workload.

Can LGTM include suggestions?

Yes, but label them clearly (for example, “LGTM, nit: consider extracting helper”). Propel Code separates must-fix and advisory comments so developers know what to tackle before merge.

How does AI change LGTM culture?

AI reviewers like Propel Code deliver context, highlight risks, and enforce policies in seconds. That lets humans focus on design discussions while LGTM stays a trusted approval, not a shortcut.

Stop Rubber-Stamp LGTM Approvals

Propel Code scores every pull request, documents the risk, and enforces review policies so LGTM comments only happen when code is truly ready.