Reviewer Load and Code Review Quality: What the Data Shows

Quick answer

Reviewer load is one of the clearest signals for review quality. When reviewers are overloaded, response times tend to increase and useful feedback drops. Track active review queue size and weekly assignments, cap load per reviewer, and route high-risk PRs to fresh reviewers. Propel automates load balancing and keeps review quality stable.

Teams often track PR size but ignore reviewer load. That is a mistake: even small PRs can suffer when assigned to overloaded reviewers. If you want higher-quality feedback, measure reviewer load and build policies that spread reviews across the team.

TL;DR

- Reviewer load is the sum of open reviews and recent assignments.

- High load correlates with lower review quality and slower response time.

- Use WIP limits and routing rules to keep load within a healthy range.

- Automate load balancing to avoid reviewer burnout.

Define reviewer load in a way you can measure

Start with a simple metric that you can compute consistently. AWS recommends tracking reviewer load and review backlog in its DevOps guidance, which gives you a clear starting definition: how many reviews sit in each reviewer's queue. Use this as a baseline, then add context such as risk level and domain expertise.

AWS Well-Architected guidance on code review metrics

Example load formula

reviewer load = open reviews + reviews assigned in the last 7 days

This definition is easy to compute and catches spikes during busy release windows.
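As a minimal sketch of the formula above, the function below counts a reviewer's open reviews and adds the reviews assigned within the window. The `ReviewAssignment` record and its field names are hypothetical, not taken from any particular tool. Note that a review that is still open and was assigned this week is counted in both terms, which is how the formula reads literally; treat that weighting as an assumption to confirm for your team.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class ReviewAssignment:
    # Hypothetical record shape; adapt the fields to your own review data.
    reviewer: str
    assigned_at: datetime
    completed_at: Optional[datetime] = None  # None means the review is still open


def reviewer_load(assignments, reviewer, now, window_days=7):
    """Return open reviews plus reviews assigned in the last `window_days` days."""
    window_start = now - timedelta(days=window_days)
    mine = [a for a in assignments if a.reviewer == reviewer]
    open_reviews = sum(1 for a in mine if a.completed_at is None)
    recent = sum(1 for a in mine if window_start <= a.assigned_at <= now)
    return open_reviews + recent
```

Passing `now` explicitly, rather than reading the clock inside the function, keeps the metric reproducible when you recompute it for past weeks.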

What research says about load and review quality

An empirical study on code review quality found that reviewer experience, participation, and workload are key factors that affect perceived review quality. When workload rises, reviewers are less likely to leave deep, actionable feedback. Use this finding to justify load caps and a rotation strategy.

Empirical study on factors that impact code review quality

Reviewers also benefit when changes are small and scoped. Google recommends keeping change lists small to reduce review overhead and keep feedback focused, which can reduce reviewer load during busy weeks.

Google Engineering Practices: Small CLs

For internal sizing benchmarks that reduce review load, see our PR size data study.

Leading indicators that load is too high

- Time to first review climbs above one business day.

- Comments become short or repetitive, with many LGTM approvals.

- Follow-up questions are not answered until after merge.

- High-risk PRs wait in queue while low-risk PRs merge quickly.
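Two of the indicators above are straightforward to quantify. The sketch below computes the median wait before a first review and the share of approvals that carry only a very short comment. The input shapes and the five-word threshold are assumptions chosen for illustration, and elapsed hours are used rather than business days to keep the example small.

```python
from statistics import median


def median_hours_to_first_review(prs):
    """prs: iterable of (opened_at, first_review_at) datetime pairs."""
    waits = [(first - opened).total_seconds() / 3600 for opened, first in prs]
    return median(waits) if waits else None


def short_approval_share(approval_comments, max_words=5):
    """Fraction of approval comments with at most `max_words` words (e.g. "LGTM")."""
    if not approval_comments:
        return 0.0
    short = sum(1 for c in approval_comments if len(c.split()) <= max_words)
    return short / len(approval_comments)
```

A rising share of short approvals is a prompt to look closer, not proof of a problem: some changes really do deserve a quick approval.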

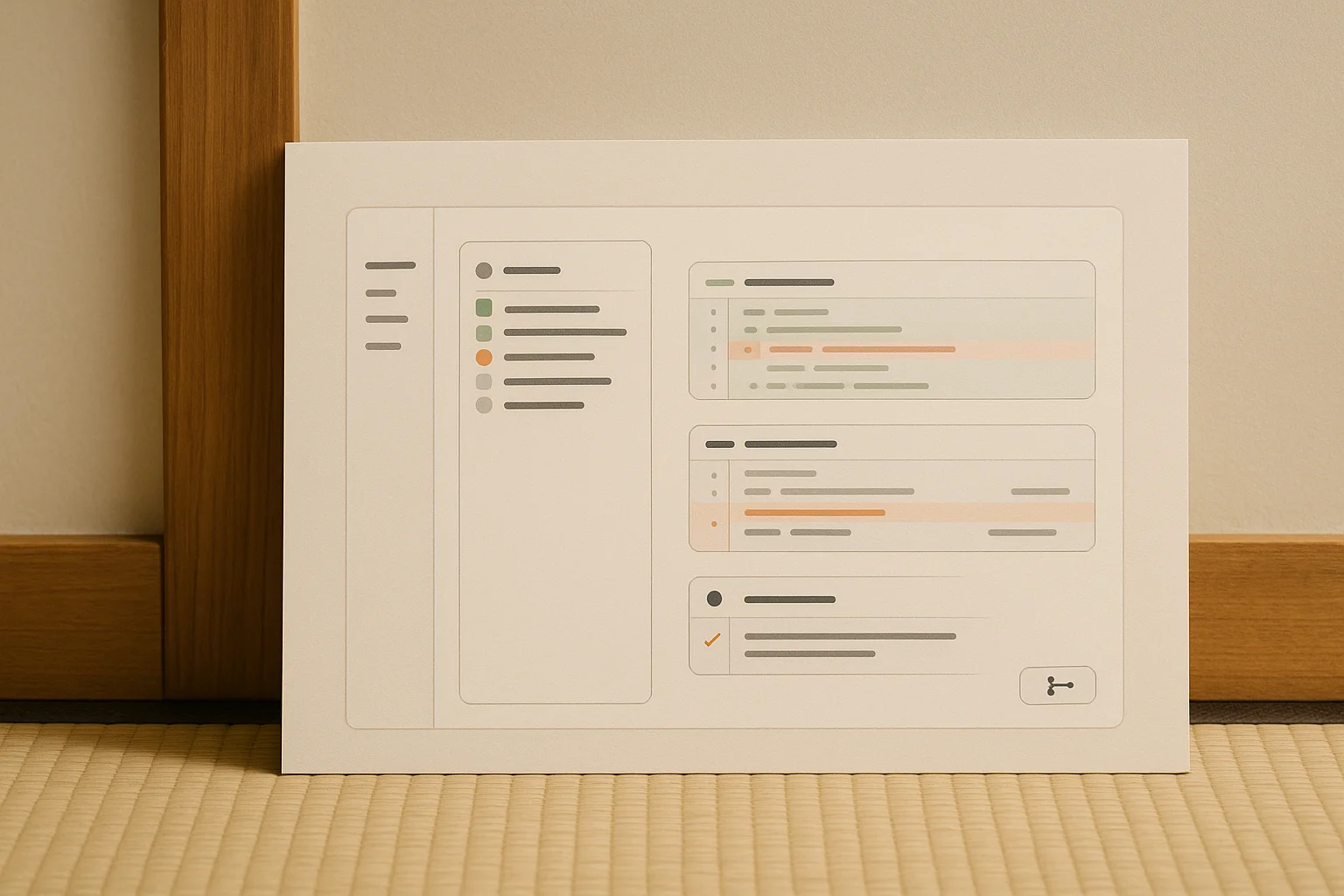

Build a reviewer load dashboard

Load is easier to manage when it is visible. A simple dashboard can reveal bottlenecks and help managers rebalance the review queue before it becomes a fire drill.

- Open reviews per reviewer, grouped by risk tier.

- Average age of open reviews and time to first response.

- Reviewer participation rate and comment depth per reviewer.

- Queue spikes during release windows or incident response.
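The first two dashboard rows can be derived from a plain list of open reviews. This is a rough sketch that assumes each open review is a dict with hypothetical `reviewer`, `risk_tier`, and `assigned_at` keys; a real dashboard would read these from your code host or review tool.

```python
from collections import defaultdict


def dashboard_rows(open_reviews, now):
    """Summarize open reviews per reviewer: counts by risk tier and average age in days."""
    counts = defaultdict(lambda: defaultdict(int))
    ages = defaultdict(list)
    for review in open_reviews:
        reviewer = review["reviewer"]
        counts[reviewer][review["risk_tier"]] += 1
        age_days = (now - review["assigned_at"]).total_seconds() / 86400
        ages[reviewer].append(age_days)
    return {
        reviewer: {
            "open_by_tier": dict(counts[reviewer]),
            "avg_age_days": sum(reviewer_ages) / len(reviewer_ages),
        }
        for reviewer, reviewer_ages in ages.items()
    }
```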

Prevent load spikes during releases

Release windows and incident response are the most common causes of review backlog. Plan ahead by shifting reviewers, narrowing scope, and reserving reviewer time for critical changes.

- Freeze non-critical changes to keep the queue small.

- Pre-assign backup reviewers for high-risk systems.

- Use shorter review SLAs for urgent changes, then revisit after release.

- Schedule review blocks on calendars so feedback is not delayed.

Set load caps and queue policies

Start with conservative caps and adjust as you learn. A common starting range is two to four active reviews per reviewer, depending on the size and risk of the PRs in flight. Use a queue limit plus a weekly cap to prevent slow accumulation.
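A cap check that combines both limits can be very small. In this sketch the queue limit of four comes from the top of the range above, while the weekly cap of ten is a made-up placeholder, since the right number depends on your team.

```python
def can_take_review(open_count, assigned_this_week, queue_limit=4, weekly_cap=10):
    """True if the reviewer is under both the active-queue limit and the weekly cap.

    queue_limit=4 follows the two-to-four starting range; weekly_cap=10 is a
    hypothetical default to tune per team.
    """
    return open_count < queue_limit and assigned_this_week < weekly_cap
```

Checking both conditions matters because a reviewer who clears reviews quickly can stay under the queue limit all week and still absorb far more than a fair share of assignments.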

Write a reviewer load policy template

A short policy makes expectations clear and easier to automate. Keep the policy simple so it is easy to explain during onboarding.

- Target response times for low-risk and high-risk reviews.

- Reviewer load caps by risk tier and team size.

- Escalation rules when reviews exceed SLA.

- Rotation cadence and backup reviewer list.
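One way to make such a policy automatable is to write it down as data. The sketch below mirrors the four items above in a small dataclass. Every field name and value is a hypothetical example, not a recommendation or an existing schema.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ReviewerLoadPolicy:
    response_hours_by_tier: dict  # target time to first response, per risk tier
    load_cap_by_tier: dict        # max active reviews per reviewer, per risk tier
    rotation_days: int            # how often the primary reviewer rotates
    backup_reviewers: tuple       # who picks up reviews when primaries are full

    def needs_escalation(self, risk_tier, hours_waiting):
        """True once a review has waited longer than its tier's response target."""
        return hours_waiting > self.response_hours_by_tier[risk_tier]


# Illustrative values only; calibrate them against your own data.
EXAMPLE_POLICY = ReviewerLoadPolicy(
    response_hours_by_tier={"low": 24, "high": 4},
    load_cap_by_tier={"low": 4, "high": 2},
    rotation_days=14,
    backup_reviewers=("reviewer-a", "reviewer-b"),
)
```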

Balance load with routing and rotations

Load limits only work if PR routing respects them. Use CODEOWNERS and a backup pool for each area so reviews can shift when a primary reviewer is overloaded. For guidance on preventing reviewer fatigue, see our code review burnout guide and the metrics playbook in code review metrics.
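The routing rule described above can be sketched as a two-step lookup: try the owners first, then fall back to the backup pool, and skip anyone at or over the cap. This assumes the owner list has already been resolved from CODEOWNERS for the changed paths; the function and parameter names are illustrative.

```python
def pick_reviewer(owners, backups, load, cap):
    """Pick the least-loaded reviewer under `cap`, preferring owners over backups.

    `load` maps reviewer name to current load; missing reviewers count as 0.
    Returns None when everyone in both pools is at or over the cap.
    """
    for pool in (owners, backups):
        available = [r for r in pool if load.get(r, 0) < cap]
        if available:
            return min(available, key=lambda r: load.get(r, 0))
    return None
```

Returning `None` instead of overloading someone makes the "everyone is full" case explicit, so it can trigger an escalation rather than a silent pile-up.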

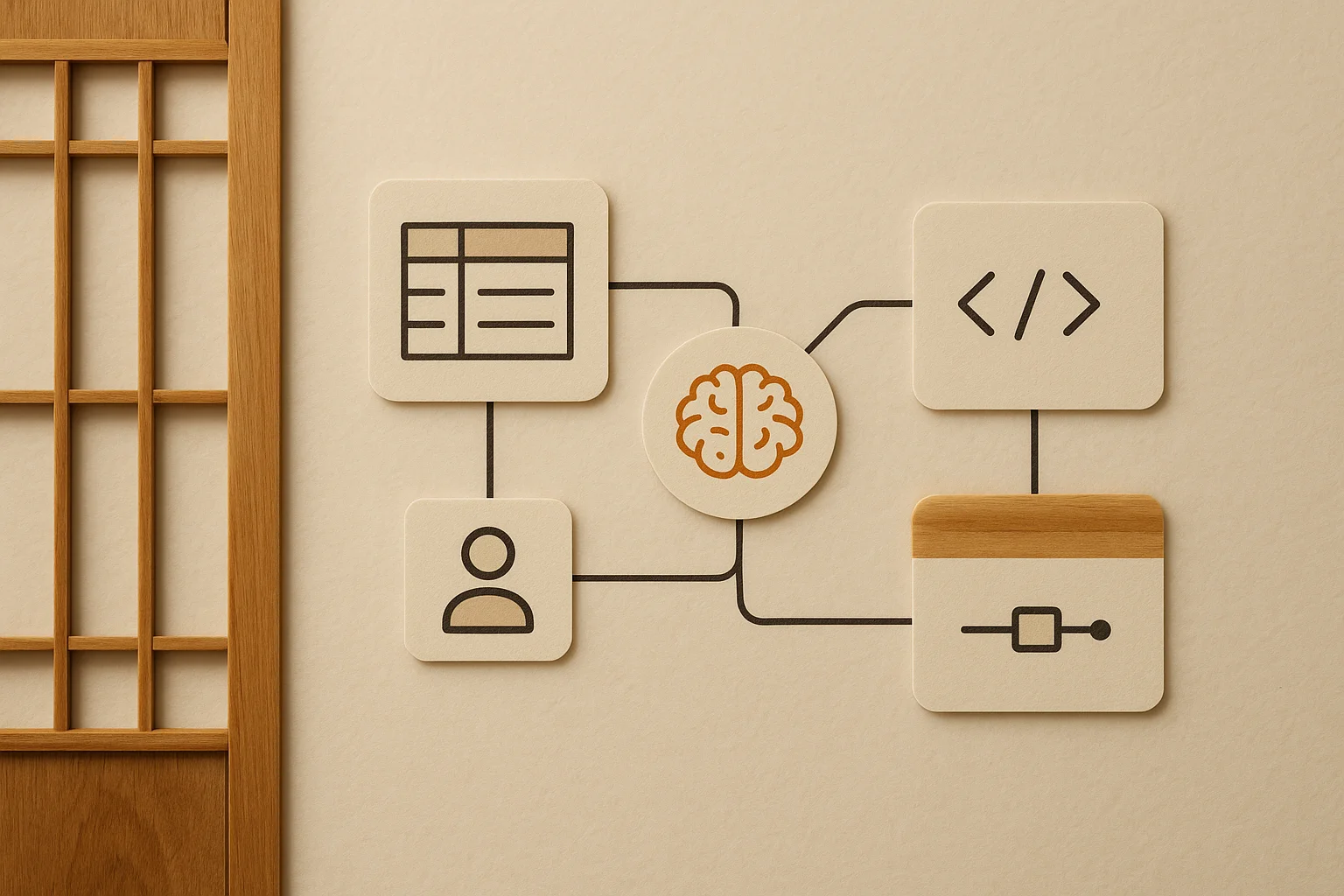

Propel automates load balancing for review quality

- Tracks reviewer load and routes PRs to available experts.

- Escalates stale reviews and reassigns when queues exceed thresholds.

- Applies risk-based routing so high-impact changes get fresh eyes.

- Surfaces load trends in dashboards to guide staffing decisions.

Next steps

Start by measuring time to first review and open review queue size per reviewer. Then group reviews into load buckets (for example below, within, and above your target range) and compare review outcomes and quality signals across those buckets. Pair these findings with guidance from our pull request review best practices and our guide to reducing PR cycle time to build a review experience that protects quality and velocity.
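To compare quality signals across load levels, a first pass can be as simple as the sketch below. It assumes each sample pairs the reviewer's load at review time with one numeric quality signal, such as comments per review. The bucket boundaries reuse the two-to-four starting range, and the bucket labels are made up for illustration.

```python
from collections import defaultdict


def load_bucket(load, low=2, high=4):
    """Label a load value relative to the target range [low, high]."""
    if load < low:
        return "below range"
    if load <= high:
        return "in range"
    return "above range"


def mean_signal_by_bucket(samples):
    """samples: iterable of (reviewer_load, quality_signal) pairs."""
    grouped = defaultdict(list)
    for load, signal in samples:
        grouped[load_bucket(load)].append(signal)
    return {bucket: sum(vals) / len(vals) for bucket, vals in grouped.items()}
```

A difference between buckets is a correlation worth investigating, not evidence of cause on its own; PR size and risk tier are likely confounders.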

FAQ

What is a healthy reviewer load?

For most teams, two to four active reviews per reviewer is a reasonable starting point. Calibrate the cap based on PR size, risk, and the experience of your reviewers.

Should senior engineers review everything?

Seniors should focus on high-risk changes and mentoring. Spread routine reviews across the team so load stays balanced and ownership grows.

How do we handle urgent PRs?

Tag urgent changes, handle them under a separate policy, and route them to a small on-call reviewer pool. Keep a record of each one so urgent reviews do not become the norm.

How do we measure load across repos?

Aggregate by reviewer, not by repo. If a reviewer is assigned across multiple repositories, the load should roll up into a single queue for capacity planning.
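As a small illustration of that roll-up, the sketch below ignores the repository when counting and keys only on the reviewer. The `(repo, reviewer)` pair format is an assumption about how your open reviews are exported.

```python
from collections import Counter


def load_by_reviewer(open_reviews):
    """open_reviews: iterable of (repo, reviewer) pairs, one per open review."""
    return dict(Counter(reviewer for _repo, reviewer in open_reviews))
```

With this shape, a reviewer holding one review in each of three repositories shows up with a load of three instead of looking lightly loaded in every per-repo view.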

Balance Reviewer Load

Propel balances reviewer queues, routes PRs by ownership, and keeps review quality high even when teams are busy.