Files Changed vs Review Usefulness: What the Data Shows

Quick answer

File count is a leading indicator of review quality. Research on useful code review comments suggests usefulness drops as changes touch more files, even when line count is modest. Keep routine feature PRs within a small file footprint, split cross-subsystem work, and track comment usefulness against files changed. Propel enforces file-based guardrails and routes reviewers by ownership so feedback stays high-signal.

Line count gets all the attention, but file count quietly drives reviewer fatigue. Every file added to a PR forces reviewers to reload context and revalidate assumptions. The result is fewer useful comments, more rubber-stamp approvals, and slower merges.

TL;DR

- Track files changed alongside lines changed, not just total diff size.

- Expect usefulness to drop when changes fan out across many files.

- Use file count thresholds per risk tier and split by subsystem.

- Route reviewers by ownership to keep context switching low.

Why file count is a different kind of complexity

A 200-line change in one file can be straightforward, while 50 lines spread across 50 files is brutal to review. Google's engineering practices emphasize small, focused changelists (CLs), which reduce the context switching that happens when changes span many files. That is why file count is often a better proxy for cognitive load than raw line count alone. See the small CL guidance for the rationale and examples.

Google Engineering Practices: Small CLs

What research says about useful review feedback

A Microsoft Research study on useful code review comments found that usefulness is related to change size, including the number of files and lines modified. That result matches what most teams see in practice: broad changes reduce the share of comments that result in concrete fixes. Use the study as a baseline when setting your own file count guardrails.

Microsoft Research: Characteristics of Useful Code Reviews

Define what "useful" means for your data

You need a consistent definition of usefulness before you can correlate it with file count. Pick one of the signals below, or combine several:

- Comment triggers a code change in the same file within 7 days.

- Comment is labeled blocking or must fix and gets resolved.

- Comment results in a follow-up commit or added test.

- Comment is referenced in the PR summary or release notes.
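As a rough illustration, two of the signals above can be combined into one predicate. This is a minimal sketch, not a standard definition: the `ReviewComment` record and its field names are hypothetical, and the 7-day window comes from the first bullet.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class ReviewComment:
    """Hypothetical record for one review comment; field names are assumptions."""
    file_path: str
    created_at: datetime
    is_blocking: bool = False
    resolved: bool = False
    # Timestamp of the first later change to the same file, if there was one.
    next_change_to_file_at: Optional[datetime] = None


def is_useful(comment: ReviewComment, window_days: int = 7) -> bool:
    """Return True if the comment meets either of two usefulness signals."""
    # Signal 1: the commented file changed within the window after the comment.
    changed_in_window = (
        comment.next_change_to_file_at is not None
        and timedelta(0)
        <= comment.next_change_to_file_at - comment.created_at
        <= timedelta(days=window_days)
    )
    # Signal 2: the comment was marked blocking and later resolved.
    blocking_resolved = comment.is_blocking and comment.resolved
    return changed_in_window or blocking_resolved
```

Whichever signals you pick, keep the predicate in one place so every report uses the same definition.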

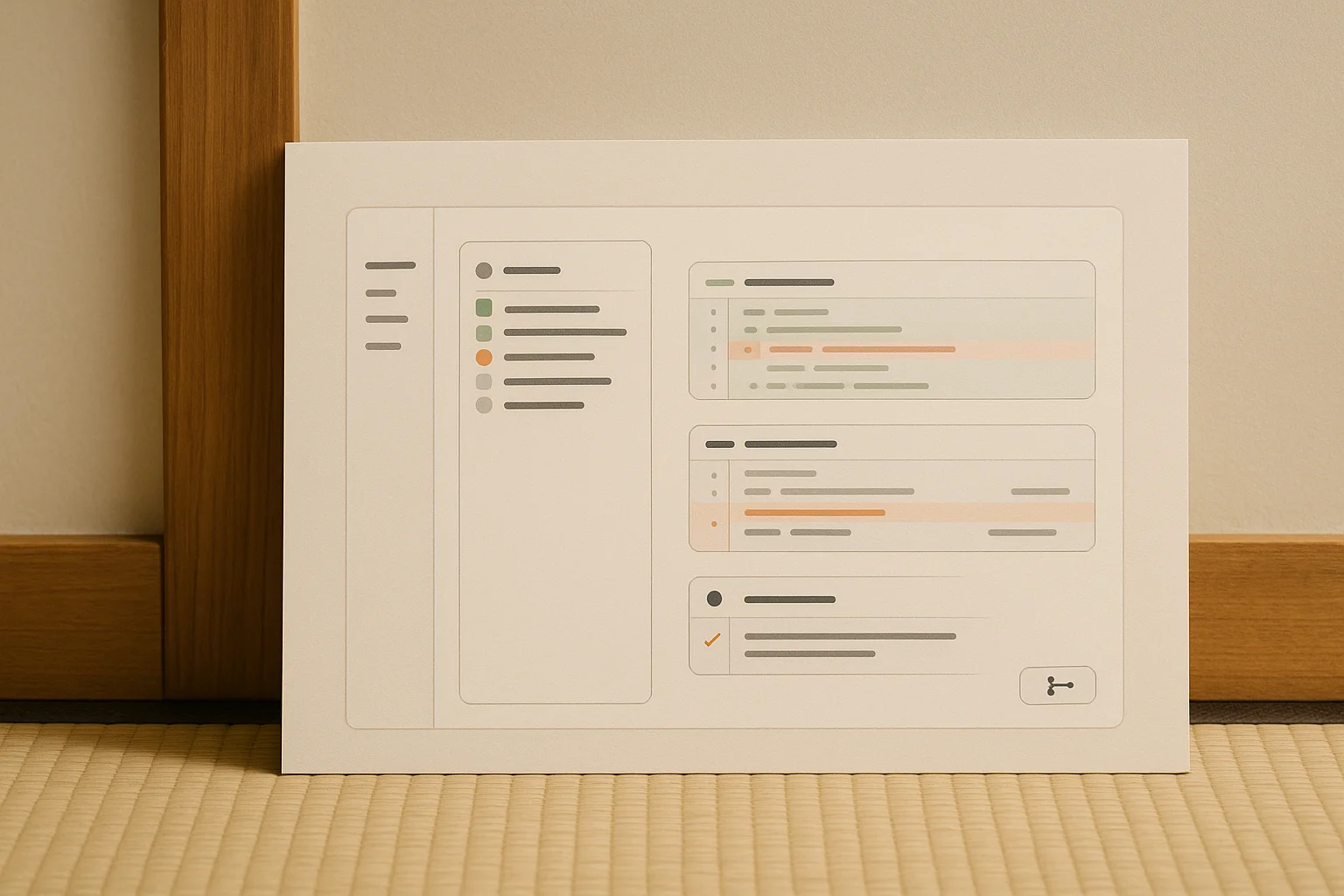

Data model for a files changed study

- Core PR fields: files changed, lines added, lines deleted, commits, labels, risk tier

- Review signals: reviewer count, time to first review, comment count, approvals

- Usefulness signals: comment resolved, follow-up commit, tests added, blocking tags

- Outcome signals: reverts, hotfixes, bugfix commits, incident tags
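One way to hold these fields is a flat record per PR. The sketch below is only an assumption about how you might shape it: the class name, field names, types, and the derived `useful_comments` count are all illustrative, not a schema from any tool.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class PullRequestRecord:
    """One row per PR; field names and types are illustrative assumptions."""

    # Core PR fields
    files_changed: int
    lines_added: int
    lines_deleted: int
    commits: int
    labels: List[str] = field(default_factory=list)
    risk_tier: str = "unclassified"
    # Review signals
    reviewer_count: int = 0
    hours_to_first_review: float = 0.0
    comment_count: int = 0
    approvals: int = 0
    # Usefulness signals (counts of comments showing each signal)
    comments_resolved: int = 0
    follow_up_commits: int = 0
    tests_added: int = 0
    blocking_comments: int = 0
    # Derived: comments that meet your chosen usefulness definition.
    useful_comments: int = 0
    # Outcome signals
    reverts: int = 0
    hotfixes: int = 0
    bugfix_commits: int = 0
    incident_tags: List[str] = field(default_factory=list)

    @property
    def lines_changed(self) -> int:
        """Total diff size: added plus deleted lines."""
        return self.lines_added + self.lines_deleted

    @property
    def usefulness_rate(self) -> float:
        """Share of comments judged useful; 0.0 when the PR has no comments."""
        if self.comment_count == 0:
            return 0.0
        return self.useful_comments / self.comment_count
```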

Typical inflection points to test

Every repo is different, but many teams see a drop in useful comments once a PR spans too many files. Start with the ranges below, then calibrate with your own data.

Use a files changed vs lines changed matrix

File count and line count tell different stories. A simple matrix helps reviewers decide when to split or escalate. Use this as a visual in your review dashboard so teams can spot risk quickly.

- Few files, few lines: Low review overhead. Fast approvals with light reviewer coverage.

- Many files, few lines: High context switching. Require ownership review even if the diff is small.

- Few files, many lines: Deep change in one area. Focus on design, tests, and edge cases.

- Many files, many lines: Highest risk. Split by subsystem or use a staged rollout and extra reviewers.
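The matrix is easy to encode once you choose cutoffs. In this sketch the function name and both default thresholds (10 files, 200 lines) are placeholders picked for illustration, not recommended values; substitute numbers calibrated from your own data.

```python
def review_quadrant(
    files_changed: int,
    lines_changed: int,
    file_threshold: int = 10,   # placeholder cutoff, not a recommendation
    line_threshold: int = 200,  # placeholder cutoff, not a recommendation
) -> str:
    """Map a PR onto the files-vs-lines matrix and return the matching guidance."""
    many_files = files_changed > file_threshold
    many_lines = lines_changed > line_threshold
    if many_files and many_lines:
        return "many files, many lines: split by subsystem or stage the rollout"
    if many_files:
        return "many files, few lines: require ownership review"
    if many_lines:
        return "few files, many lines: focus on design, tests, and edge cases"
    return "few files, few lines: light reviewer coverage"
```

For example, `review_quadrant(50, 50)` lands in the "many files, few lines" cell under these placeholder thresholds, which matches the 50-lines-across-50-files case discussed earlier.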

Add file count to your PR template

Make file count visible before reviewers open the diff. A lightweight PR template forces authors to consider scope early and encourages smarter splits.

- Files changed: auto-filled by your CI bot or a GitHub Action.

- Subsystems touched: list of services or packages.

- Owner reviewers requested: primary and backup.

- Split plan: explain why this could not be split further.

Common traps that inflate file count

If file counts keep creeping up, watch for the patterns below. These usually indicate a process issue rather than a true requirement.

- Bundling formatting changes with functional work.

- Mixing refactors and features in the same PR.

- Including vendor updates or generated files without isolating them.

- Touching shared configs instead of using scoped overrides.

Split changes by subsystem, not by volume

When file count creeps up, do not split randomly. Split along boundaries that let reviewers stay in one mental model at a time.

- Infrastructure prep PR for configs, schema, or flags.

- API contract PR for interfaces and data model updates.

- Implementation PR per service or package.

- UI and UX PR scoped to a single screen or workflow.

- Observability PR for logging, metrics, and alerts.
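To see where a large PR could be split, it can help to group its changed files by subsystem first. The sketch below assumes a subsystem maps to the leading directory of each path, which will not hold in every repo; the function name and the `depth` parameter are hypothetical.

```python
from collections import defaultdict
from pathlib import PurePosixPath
from typing import Dict, Iterable, List


def group_by_subsystem(paths: Iterable[str], depth: int = 1) -> Dict[str, List[str]]:
    """Group changed files by their first `depth` directory components."""
    groups: Dict[str, List[str]] = defaultdict(list)
    for path in paths:
        # Drop the file name so only directory components form the key.
        dir_parts = PurePosixPath(path).parts[:-1]
        # Files at the repository root have no directory; bucket them together.
        key = "/".join(dir_parts[:depth]) or "(root)"
        groups[key].append(path)
    return dict(groups)
```

If the result has more than one or two keys, each key is a candidate PR boundary.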

Policy template for file count guardrails

Use policy tiers so teams know when exceptions are allowed. Combine file count with change type and risk so reviewers are not blocked on purely mechanical changes.
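A tiered policy of this kind might be sketched as follows. Every tier name, limit, and exempt change type here is a made-up placeholder to show the shape of the check; none of these numbers come from the research cited above or from Propel.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet


@dataclass(frozen=True)
class FilePolicy:
    """File count limit for one risk tier, plus change types exempt from it."""
    max_files: int
    exempt_change_types: FrozenSet[str] = frozenset()


# Hypothetical tiers and limits; calibrate against your own usefulness data.
POLICIES: Dict[str, FilePolicy] = {
    "high": FilePolicy(max_files=5),
    "medium": FilePolicy(max_files=10, exempt_change_types=frozenset({"rename"})),
    "low": FilePolicy(max_files=20, exempt_change_types=frozenset({"rename", "format"})),
}


def check_pr(risk_tier: str, change_type: str, files_changed: int) -> str:
    """Return 'exempt', 'ok', or 'over_limit' for a PR under its tier's policy."""
    policy = POLICIES[risk_tier]
    # Purely mechanical change types skip the limit where the tier allows it.
    if change_type in policy.exempt_change_types:
        return "exempt"
    return "ok" if files_changed <= policy.max_files else "over_limit"
```

Note that the high-risk tier in this sketch has no exemptions, so even a mechanical rename is held to the limit there.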

Route reviews to keep context switching low

Reviewer ownership reduces the penalty of higher file counts. Use CODEOWNERS, ownership tags, and review routing so each reviewer focuses on a consistent area of the codebase. For broader strategies, see our guide to pull request review best practices and our playbook on code review metrics.
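The routing idea can be sketched with a plain path-prefix map. This is a simplification under stated assumptions: real CODEOWNERS files use richer pattern rules than prefix matching, and the team handles, prefixes, and the `@unowned` fallback below are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, List

# Hypothetical ownership map: path prefix -> owning team.
OWNERS: Dict[str, str] = {
    "services/api/": "@api-team",
    "services/": "@platform-team",
    "web/": "@frontend-team",
}


def route_reviewers(paths: Iterable[str], owners: Dict[str, str] = OWNERS) -> Dict[str, List[str]]:
    """Assign each changed file to the owner with the longest matching prefix."""
    # Check longer prefixes first so the most specific owner wins.
    prefixes = sorted(owners, key=len, reverse=True)
    assignments: Dict[str, List[str]] = defaultdict(list)
    for path in paths:
        owner = next((owners[p] for p in prefixes if path.startswith(p)), "@unowned")
        assignments[owner].append(path)
    return dict(assignments)
```

Each reviewer then sees only the slice of the PR in their area, and any files under `@unowned` point to a gap in the ownership map.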

Propel enforces file count guardrails automatically

- Sets policy thresholds for files changed by risk tier.

- Routes reviewers based on ownership to reduce context switching.

- Flags PRs that violate file count limits before review starts.

- Tracks usefulness rate so teams can refine thresholds over time.

Next steps

Start by measuring usefulness rate against files changed for the last 90 days. If your usefulness rate drops sharply after a specific file count, add that threshold to your review policy and validate the impact. Pair that with our PR size data study, the framework in our blocking versus non-blocking guide, and our code review checklist so reviewers can focus on the feedback that matters.
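The first measurement can be as simple as bucketing PRs by files changed and computing the usefulness rate per bucket. In the sketch below the bucket bounds are arbitrary placeholders, not suggested thresholds, and the input is assumed to be tuples of (files changed, useful comments, total comments) exported from your own tooling.

```python
from bisect import bisect_left
from collections import defaultdict
from typing import Dict, Iterable, Sequence, Tuple

# Arbitrary placeholder bucket upper bounds; adjust to your repo's distribution.
BUCKET_UPPER_BOUNDS: Sequence[int] = (5, 10, 20)


def bucket_label(files_changed: int, bounds: Sequence[int] = BUCKET_UPPER_BOUNDS) -> str:
    """Return a label such as '1-5', '6-10', or '>20' for a file count."""
    idx = bisect_left(bounds, files_changed)
    if idx == len(bounds):
        return f">{bounds[-1]}"
    lower = 1 if idx == 0 else bounds[idx - 1] + 1
    return f"{lower}-{bounds[idx]}"


def usefulness_by_bucket(
    prs: Iterable[Tuple[int, int, int]],
    bounds: Sequence[int] = BUCKET_UPPER_BOUNDS,
) -> Dict[str, float]:
    """Aggregate (files_changed, useful_comments, total_comments) per bucket."""
    useful: Dict[str, int] = defaultdict(int)
    total: Dict[str, int] = defaultdict(int)
    for files_changed, useful_comments, total_comments in prs:
        label = bucket_label(files_changed, bounds)
        useful[label] += useful_comments
        total[label] += total_comments
    # Skip buckets with no comments to avoid dividing by zero.
    return {label: useful[label] / total[label] for label in total if total[label] > 0}
```

Pooling comments per bucket, rather than averaging per-PR rates, keeps PRs with one or two comments from dominating the result; either choice is defensible as long as you apply it consistently.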

FAQ

Do generated files count?

Count them for visibility, but separate them in reporting. Track a second metric for human written files so the usefulness signal is not diluted by generated output.
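A small sketch of the two-metric idea follows, assuming generated and vendored files can be recognized by path pattern. The pattern list is a hypothetical starting point; replace it with whatever your repo actually generates.

```python
from fnmatch import fnmatchcase
from typing import Iterable, Sequence, Tuple

# Hypothetical patterns for generated or vendored files.
GENERATED_PATTERNS: Sequence[str] = ("*_pb2.py", "*.pb.go", "*.lock", "*.min.js", "vendor/*")


def is_generated(path: str, patterns: Sequence[str] = GENERATED_PATTERNS) -> bool:
    """Return True if the path matches any generated-file pattern."""
    # fnmatchcase's '*' also matches '/', so 'vendor/*' covers nested paths.
    return any(fnmatchcase(path, pattern) for pattern in patterns)


def count_files(paths: Iterable[str]) -> Tuple[int, int]:
    """Return (total files changed, human-written files changed)."""
    path_list = list(paths)
    human = sum(1 for p in path_list if not is_generated(p))
    return len(path_list), human
```

Report both numbers side by side, and correlate usefulness against the human-written count.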

What if a refactor touches many files?

Bundle refactors by pattern. Each PR should apply one change type, such as renaming a class or moving a module, and keep functional changes separate.

Should file count trump line count?

Treat them as complementary. A small line count across many files can still be risky because reviewers must validate each file for side effects.

How does this work in monorepos?

Scope by package. Require each PR to touch one domain or subsystem and use ownership routing so reviewers only see the files they maintain.

For additional guidance on change size when setting file and line count policies, see the Chromium CL tips on small changes.

Keep Reviews Focused

Propel helps teams enforce file count guardrails, route reviews by ownership, and keep feedback useful even when changes span many files.